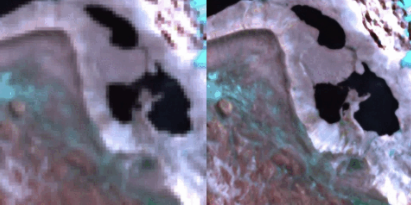

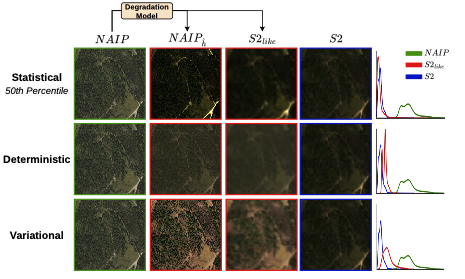

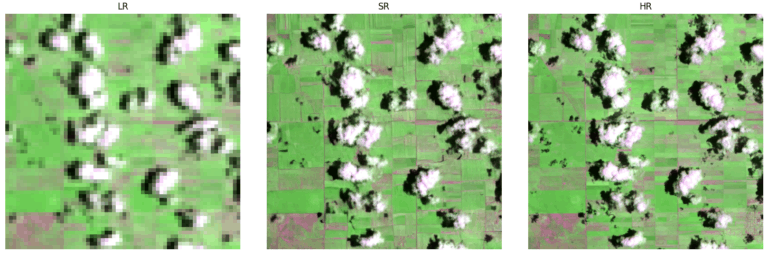

SEN2NAIP is a large-scale dataset for super-resolution in remote sensing that pairs low-resolution (LR) Sentinel-2 images with high-resolution (HR) NAIP imagery. It includes 2,851 original LR-HR pairs and over 35,000 synthetic pairs generated with a custom degradation model, enabling the development of models that enhance the spatial resolution of Sentinel-2 imagery.
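The degradation model itself is not described here. To make the idea of synthetic LR-HR pairs concrete, the sketch below generates an LR image from an HR image by spatial aggregation, a linear radiometric adjustment, and optional Gaussian noise. The functions `block_average` and `degrade` and their parameters (`gain`, `offset`, `noise_std`) are hypothetical and are not the SEN2NAIP degradation model. A factor of 4 is assumed because it maps a 2.5 m grid onto Sentinel-2's 10 m grid.

```python
import random
from typing import List

# A single-band image stored as rows of pixel values.
Image = List[List[float]]


def block_average(hr: Image, factor: int = 4) -> Image:
    """Downsample by averaging non-overlapping factor x factor blocks
    (e.g. factor=4 maps a 2.5 m grid onto a 10 m grid)."""
    rows, cols = len(hr), len(hr[0])
    if rows % factor or cols % factor:
        raise ValueError("image size must be divisible by factor")
    lr: Image = []
    for i in range(0, rows, factor):
        lr_row = []
        for j in range(0, cols, factor):
            block = [hr[i + di][j + dj] for di in range(factor) for dj in range(factor)]
            lr_row.append(sum(block) / len(block))
        lr.append(lr_row)
    return lr


def degrade(hr: Image, factor: int = 4, gain: float = 1.0, offset: float = 0.0,
            noise_std: float = 0.0, seed: int = 0) -> Image:
    """Hypothetical HR -> synthetic LR degradation: spatial aggregation,
    a linear radiometric adjustment, and optional Gaussian noise.
    Illustrative only; NOT the SEN2NAIP degradation model."""
    rng = random.Random(seed)  # seeded so synthetic pairs are reproducible
    lr = block_average(hr, factor)
    return [[gain * v + offset + rng.gauss(0.0, noise_std) for v in row] for row in lr]
```

Each call to `degrade` on an HR tile yields one synthetic (LR, HR) training pair. A realistic model would replace the box average with the sensor's point-spread function and fit the radiometric terms to real Sentinel-2 data.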

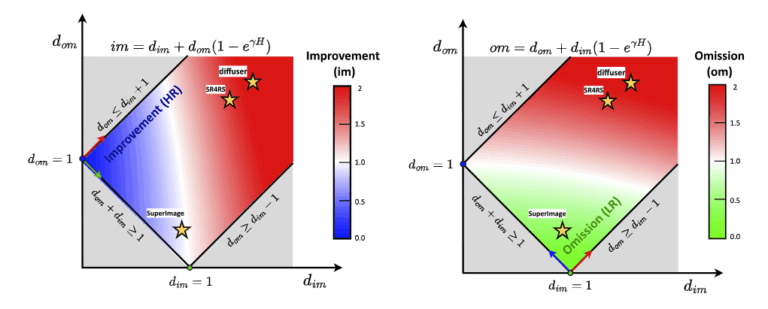

Image super-resolution (SR), which reconstructs high-resolution imagery from low-resolution sources, has received growing attention in remote sensing. To address the open challenges in evaluating SR methods, OpenSR-test provides a comprehensive benchmark designed specifically for remote sensing SR, with tailored quality metrics and curated cross-sensor datasets.

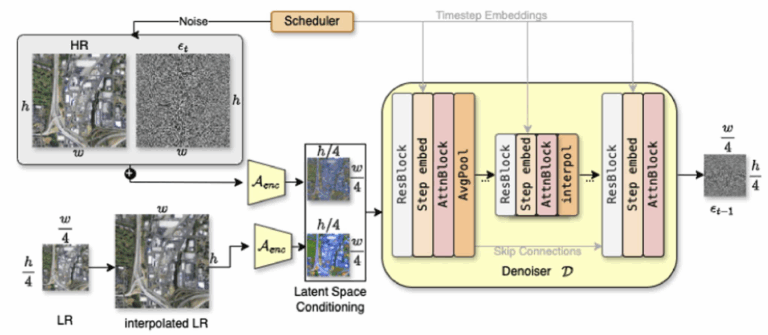

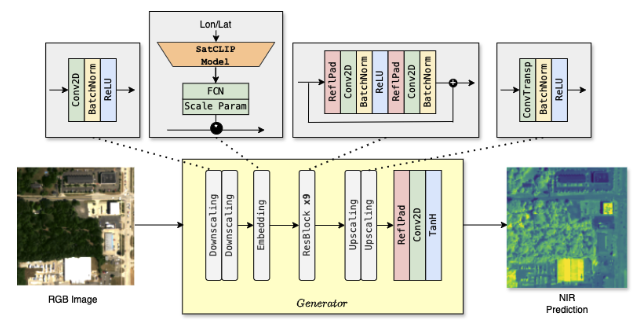

This work proposes a computationally efficient latent diffusion model that super-resolves Sentinel-2 imagery from 10 m to 2.5 m. The model handles both the visible and near-infrared (NIR) bands and is conditioned on the low-resolution input to preserve spectral fidelity. Unlike previous approaches, it also generates pixel-level uncertainty maps, so the reliability of the enhanced imagery can be assessed before it is used in critical remote sensing tasks.
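Because a diffusion model is stochastic, one common way to obtain a pixel-level uncertainty map is to draw several SR samples for the same input and measure their per-pixel spread. Whether this model computes its maps exactly this way is an assumption. The sketch below takes a list of already-generated samples (the sampler itself is out of scope) and returns the per-pixel mean as the point estimate and the per-pixel standard deviation as the uncertainty. The name `uncertainty_map` is hypothetical.

```python
import statistics
from typing import List, Tuple

Image = List[List[float]]  # one band, rows of pixel values


def uncertainty_map(samples: List[Image]) -> Tuple[Image, Image]:
    """Given N stochastic SR outputs for the same LR input, return the
    per-pixel mean (point estimate) and per-pixel population standard
    deviation (a simple uncertainty map). Hypothetical helper."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to estimate spread")
    rows, cols = len(samples[0]), len(samples[0][0])
    mean: Image = []
    std: Image = []
    for i in range(rows):
        # Collect the N sampled values at each pixel of row i.
        values_per_pixel = [[s[i][j] for s in samples] for j in range(cols)]
        mean.append([statistics.fmean(v) for v in values_per_pixel])
        std.append([statistics.pstdev(v) for v in values_per_pixel])
    return mean, std
```

High values in the returned `std` map flag pixels where the samples disagree, which is where the super-resolved detail is least trustworthy.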

SEN2SR is a new framework for super-resolving Sentinel-2 images while preserving spectral and spatial consistency. It relies on harmonized synthetic training data and a low-frequency constraint to minimize artifacts. Evaluated across various deep learning architectures, with Explainable AI techniques supporting the analysis, it achieved superior performance in both resolution enhancement and downstream tasks.
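The exact form of the low-frequency constraint is not given here. A minimal sketch, assuming it means that the SR output, once aggregated back onto the LR grid, should match the original Sentinel-2 pixels: `consistency_error` measures how far an SR image violates this, and `low_frequency_correct` enforces it by shifting each block so its mean equals the corresponding LR pixel while keeping the detail inside the block. Both function names are hypothetical, and using a plain block average as the low-pass filter is a simplifying assumption.

```python
from typing import List

Image = List[List[float]]


def block_average(img: Image, factor: int) -> Image:
    """Average non-overlapping factor x factor blocks (HR grid -> LR grid)."""
    rows, cols = len(img), len(img[0])
    return [
        [
            sum(img[i + di][j + dj] for di in range(factor) for dj in range(factor)) / factor ** 2
            for j in range(0, cols, factor)
        ]
        for i in range(0, rows, factor)
    ]


def consistency_error(sr: Image, lr: Image, factor: int) -> float:
    """Mean absolute difference between the downsampled SR output and the LR input."""
    low = block_average(sr, factor)
    diffs = [abs(a - b) for row_a, row_b in zip(low, lr) for a, b in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def low_frequency_correct(sr: Image, lr: Image, factor: int) -> Image:
    """Shift each factor x factor block of the SR image so that its mean equals
    the corresponding LR pixel; high-frequency detail within the block is kept."""
    if len(sr) != len(lr) * factor or len(sr[0]) != len(lr[0]) * factor:
        raise ValueError("SR must be exactly `factor` times larger than LR")
    low = block_average(sr, factor)
    return [
        [sr[i][j] + lr[i // factor][j // factor] - low[i // factor][j // factor]
         for j in range(len(sr[0]))]
        for i in range(len(sr))
    ]
```

In training, a term like `consistency_error` could be added to the loss. Applying `low_frequency_correct` after inference instead guarantees, under this block-average assumption, that the SR output never shifts the LR radiometry.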