We’re excited to announce that the full codebase and pretrained weights of our multispectral diffusion-based super-resolution model for Sentinel-2 imagery are now available as open source! This marks a major step toward democratizing high-quality, trustworthy remote sensing products by enabling researchers, practitioners, and organizations to upscale freely available low-resolution satellite imagery using cutting-edge generative models.

Model Overview

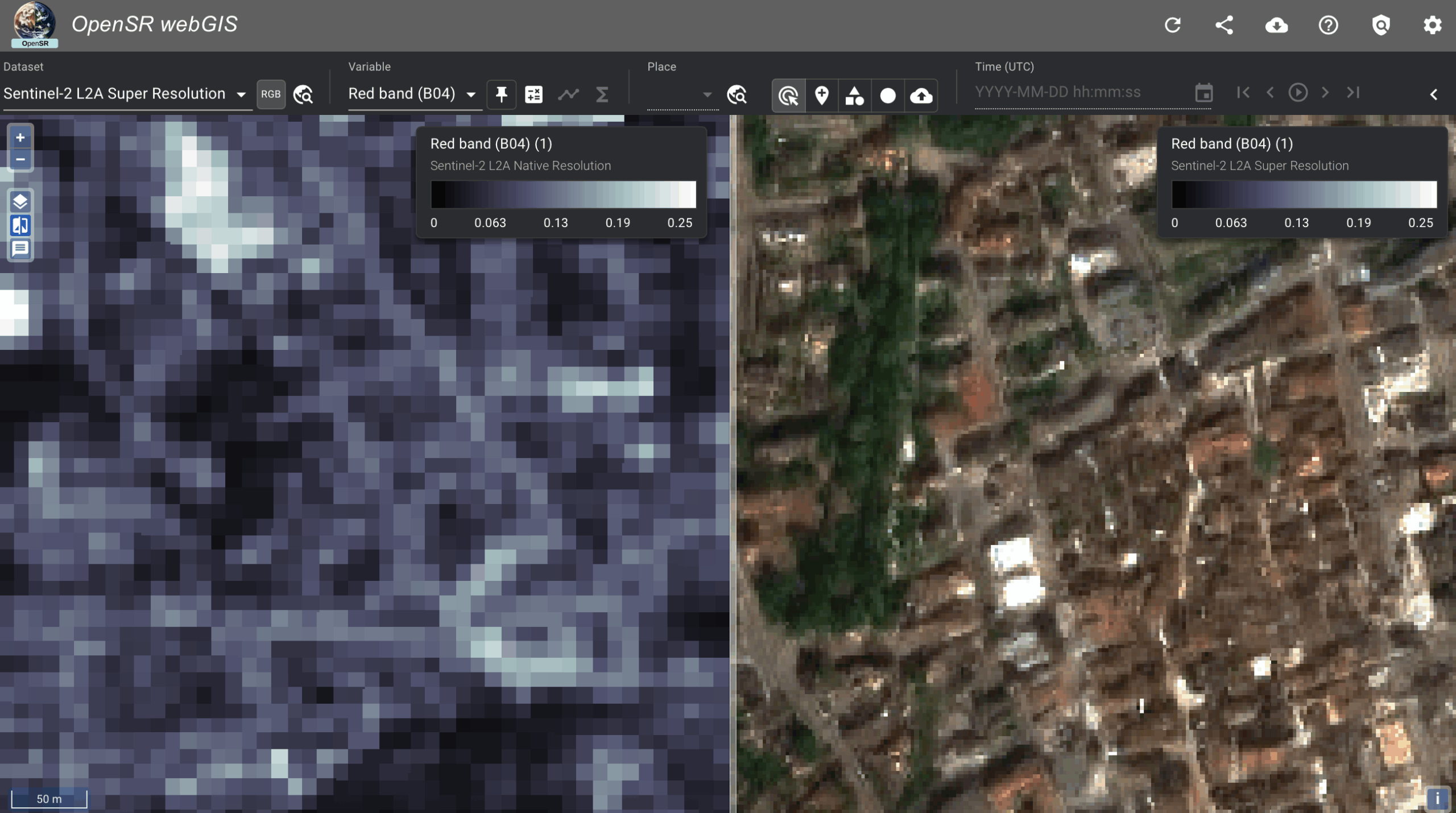

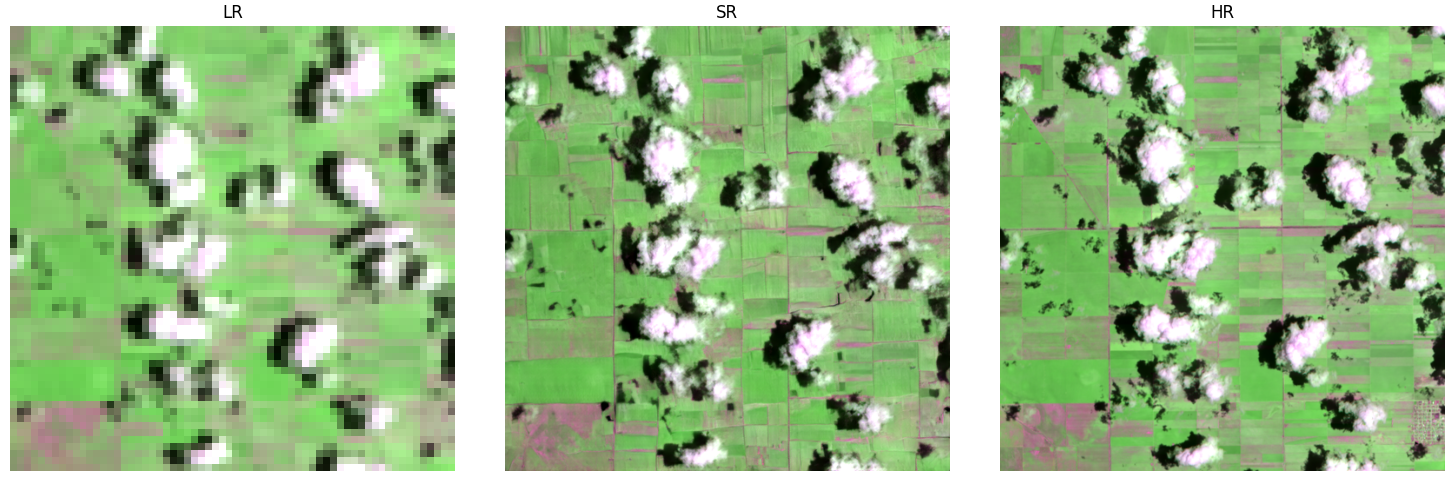

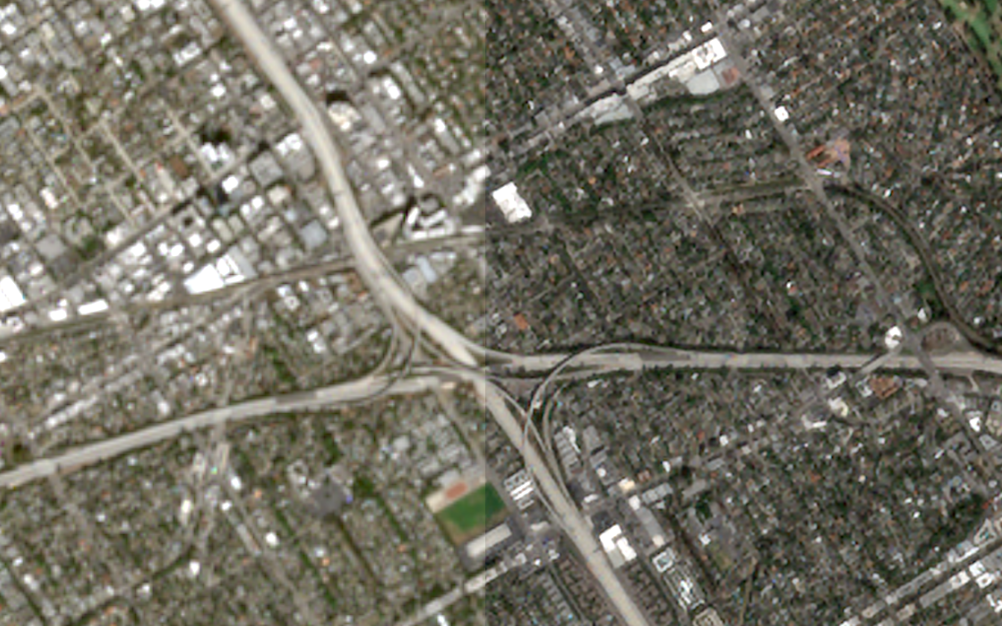

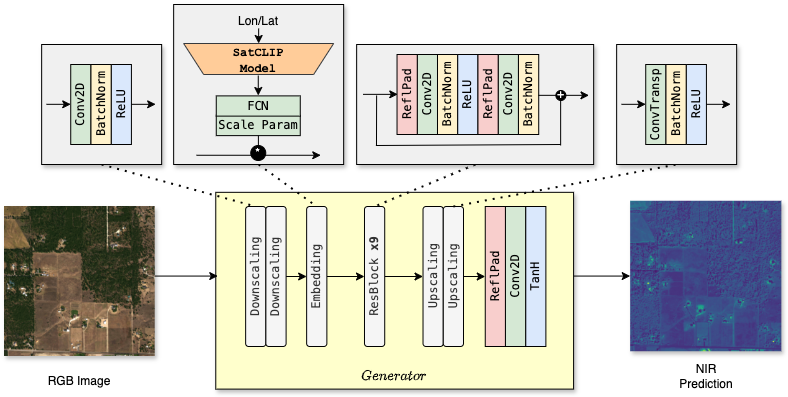

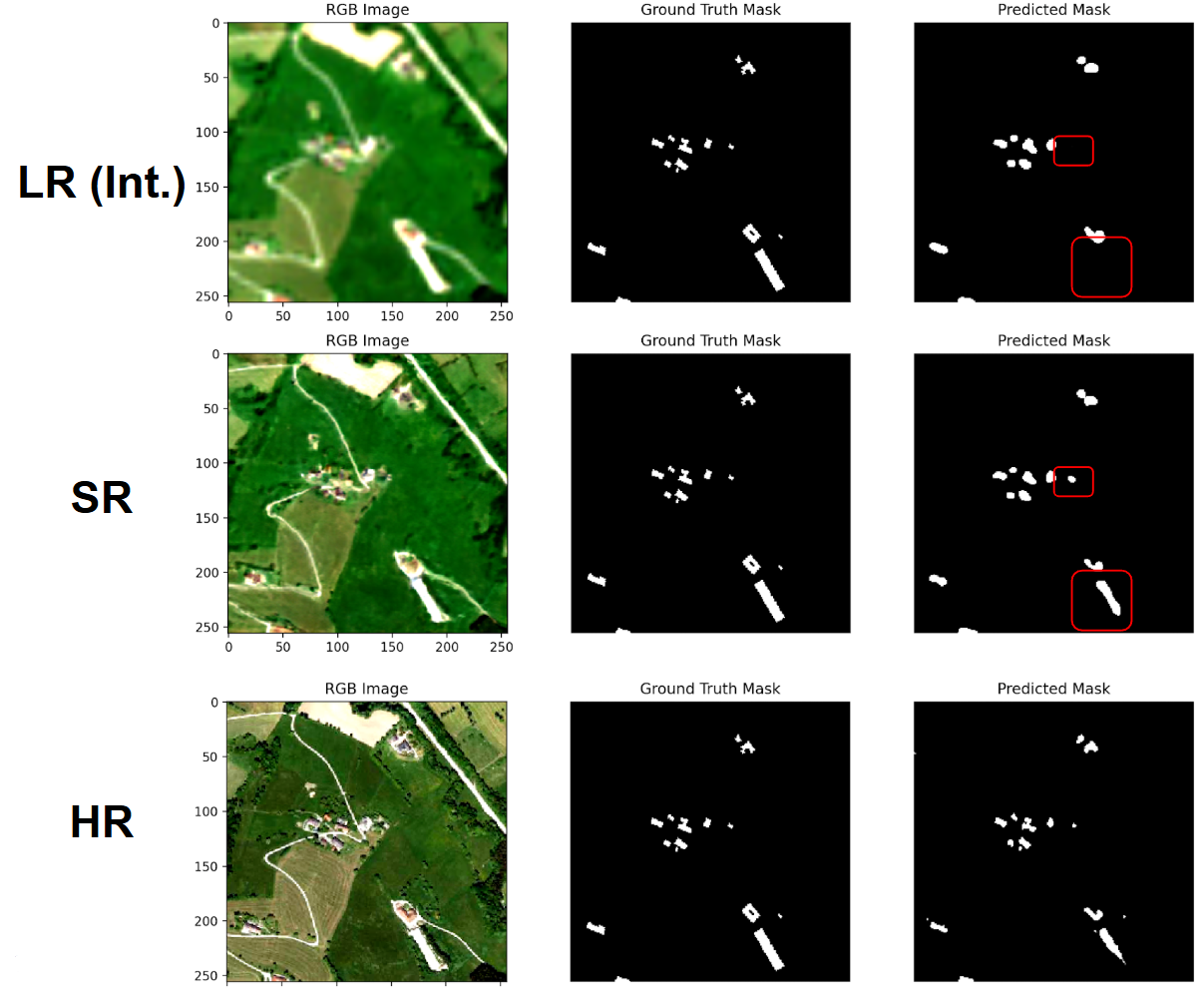

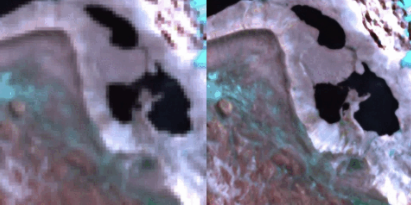

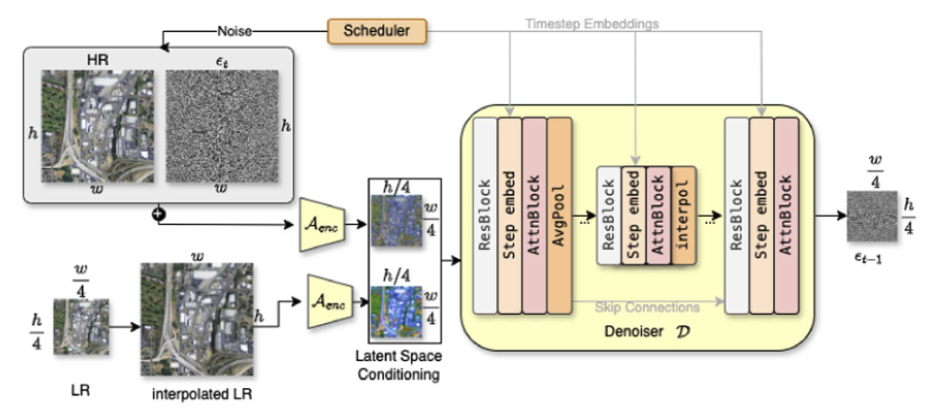

Published in the IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, our model is the first diffusion-based framework specifically designed for large-scale remote sensing super-resolution. It uses a latent diffusion architecture adapted to multispectral Sentinel-2 inputs (including the visible and NIR bands) and generates high-resolution outputs, upscaling from 10 m to 2.5 m. Unlike conventional image diffusion methods, we condition the generative process directly on the low-resolution input, which preserves spectral integrity and suppresses hallucinations.
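Because the output is conditioned on the low-resolution input, one simple way to sanity-check spectral integrity is to degrade the super-resolved result back to 10 m and compare it band by band with the input. The sketch below assumes a `(bands, H, W)` array layout, four bands (for example R, G, B, NIR), and a plain 4 × 4 block average as the degradation model. The real Sentinel-2 point spread function differs, and `downsample_mean` and `spectral_consistency` are hypothetical helpers, not part of the released repository.

```python
import numpy as np

# 10 m -> 2.5 m is a 4x upscaling factor along each spatial axis.
SCALE = 4


def downsample_mean(sr: np.ndarray, scale: int = SCALE) -> np.ndarray:
    """Average non-overlapping scale x scale blocks of a (bands, H, W) array.

    Assumption: a simple block average stands in for the true sensor degradation.
    """
    bands, h, w = sr.shape
    if h % scale or w % scale:
        raise ValueError("spatial size must be divisible by the scale factor")
    # Split each spatial axis into (coarse index, offset within block), then average the offsets.
    blocks = sr.reshape(bands, h // scale, scale, w // scale, scale)
    return blocks.mean(axis=(2, 4))


def spectral_consistency(lr: np.ndarray, sr: np.ndarray, scale: int = SCALE) -> np.ndarray:
    """Per-band mean absolute error between the LR input and the block-averaged SR output."""
    if sr.shape[0] != lr.shape[0]:
        raise ValueError("LR and SR must have the same number of bands")
    degraded = downsample_mean(sr, scale)
    if degraded.shape != lr.shape:
        raise ValueError("SR spatial size must equal LR spatial size times the scale factor")
    return np.abs(degraded - lr).mean(axis=(1, 2))


# Usage with synthetic data: a nearest-neighbour upscale is perfectly consistent.
rng = np.random.default_rng(0)
lr_tile = rng.random((4, 32, 32))  # hypothetical 4-band, 32 x 32 tile at 10 m
sr_tile = np.repeat(np.repeat(lr_tile, SCALE, axis=1), SCALE, axis=2)  # 128 x 128 at 2.5 m
errors = spectral_consistency(lr_tile, sr_tile)
```

A perfectly consistent output yields a per-band error of zero; larger values flag bands where the super-resolved result drifts from the input radiometry.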

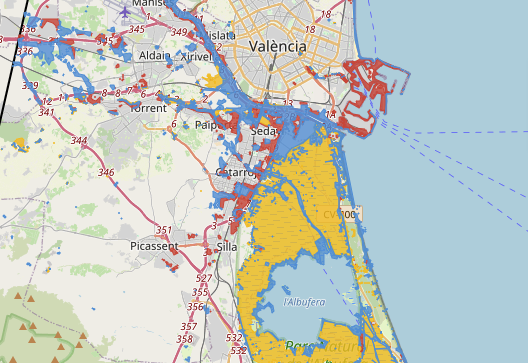

One of the key innovations is the generation of pixel-level uncertainty maps, which let users assess the confidence of the super-resolution (SR) output. This is essential for applications such as environmental monitoring, land cover mapping, and change detection, where reliability matters as much as resolution.
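This post does not spell out how the uncertainty maps are computed. A common approach for diffusion models, and purely an assumption here, is to draw several stochastic samples for the same low-resolution input and take the per-pixel standard deviation across them. In the sketch below, `uncertainty_map` is a hypothetical helper and `toy_sampler` is a hypothetical stand-in for the actual model that adds more noise to the right half of the tile.

```python
from typing import Callable, Tuple

import numpy as np


def uncertainty_map(
    sample_fn: Callable[[np.random.Generator], np.ndarray],
    n_samples: int = 8,
    seed: int = 0,
) -> Tuple[np.ndarray, np.ndarray]:
    """Draw n_samples stochastic SR outputs and return (per-pixel mean, per-pixel std).

    Assumption: uncertainty is estimated as the spread across repeated diffusion samples.
    """
    if n_samples < 2:
        raise ValueError("need at least two samples to estimate spread")
    rng = np.random.default_rng(seed)
    # Shape: (n_samples, bands, H, W)
    samples = np.stack([sample_fn(rng) for _ in range(n_samples)])
    return samples.mean(axis=0), samples.std(axis=0)


def toy_sampler(rng: np.random.Generator) -> np.ndarray:
    """Hypothetical stand-in for one SR sample: low noise on the left, high noise on the right."""
    base = np.zeros((4, 16, 16))
    noise_scale = np.full((16, 16), 0.01)
    noise_scale[:, 8:] = 0.5
    # Broadcasting applies the same (H, W) noise scale to every band.
    return base + rng.normal(size=base.shape) * noise_scale


mean_sr, std_sr = uncertainty_map(toy_sampler, n_samples=32)
# Collapse the band axis into a single (H, W) confidence map.
pixel_uncertainty = std_sr.mean(axis=0)
```

Averaging the standard deviation over bands gives one map per tile; keeping the band axis instead shows whether, for example, the NIR band is less certain than the visible ones.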

Get Started

You can now access the full repository, training and inference scripts, and pretrained weights on GitHub and Hugging Face. Also check out the interactive example notebooks on Colab (linked from GitHub). We hope this open release accelerates research and real-world deployment of generative models in Earth observation. Please note that the repository contains the model only. If you plan to use this model in your existing workflows, visit the Get Started page to benefit from the complete software package we are releasing to make implementation easier.