Get Started with ESA OpenSR

Welcome to ESA OpenSR, your launchpad into advanced super-resolution for Sentinel-2 imagery. Our ecosystem comprises tools that take low-resolution multispectral imagery and super-resolve it to a spatial resolution of 2.5 m. The utilities provide boilerplate code that handles downloading, preprocessing, super-resolution, and postprocessing for all 10 m and 20 m Sentinel-2 bands. The packages include patching operations, so you can input large images or whole Sentinel-2 tiles and embed these models and products into your workflows.
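The patching idea can be pictured as covering a large scene with overlapping windows, each small enough for one model call. The following is a minimal sketch of that idea only, not the packages' own implementation: the helper name `tile_windows` and the tile size and overlap values are assumptions chosen for illustration.

```python
def tile_windows(height, width, tile=128, overlap=16):
    """Return (row, col, h, w) windows that together cover a height x width image.

    Hypothetical helper for illustration; `tile` and `overlap` are assumed values.
    """
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    stride = tile - overlap

    def starts(size):
        # An axis shorter than one tile gets a single window starting at 0.
        if size <= tile:
            return [0]
        offsets = list(range(0, size - tile, stride))
        # The last window is shifted back so it ends exactly at the image edge.
        offsets.append(size - tile)
        return offsets

    return [
        (r, c, min(tile, height), min(tile, width))
        for r in starts(height)
        for c in starts(width)
    ]


# A full Sentinel-2 tile is 10980 x 10980 pixels at 10 m.
windows = tile_windows(10980, 10980)
```

Shifting the final window back, rather than padding, keeps every window the same size, which is convenient when a model expects fixed-size inputs. Overlapping regions would then need to be blended or cropped when the outputs are stitched together.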

No installations, no environments, no data downloads – just select your region and let the Colab Notebook create your SR Product.

Flagship RGB-NIR Model: LDSR-S2

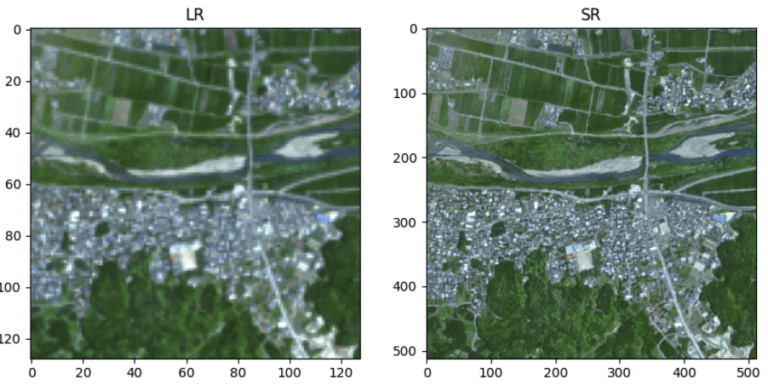

At the core of our super-resolution ecosystem is LDSR-S2, a state-of-the-art latent diffusion model tailored for enhancing Sentinel-2 imagery. Specifically, LDSR-S2 targets the 10 m bands – red (B04), green (B03), blue (B02), and near-infrared (B08) – and reconstructs them at a spatial resolution of 2.5 m. The model operates in a compressed latent space, enabling both efficient inference and high-quality texture generation while preserving global scene structure.

Unlike conventional pixel-domain methods, LDSR-S2 uses DDIM sampling in a compressed latent space, conditioned on the original 10 m observations. This setup lets the model iteratively refine its predictions by injecting structured noise during sampling, generating high-frequency spatial details that remain consistent with the low-resolution input.

Importantly, LDSR-S2 not only enhances visual sharpness but also quantifies the sampling uncertainty, offering per-pixel confidence estimates, a critical feature for scientific and operational reliability. The uncertainty map highlights areas where the model was less certain in reconstructing fine-grained spatial features, which is particularly useful in heterogeneous or occluded regions such as urban and industrial areas or complex vegetation patches. These uncertainty estimates can be generated alongside the super-resolved output using the demo.py script or directly from the API with a simple flag.
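One common way to estimate sampling uncertainty for a stochastic generator is to draw several samples for the same input and measure the per-pixel spread. The sketch below illustrates that idea with NumPy. It does not call LDSR-S2: `toy_stochastic_sr` is a hypothetical stand-in sampler, and the use of the standard deviation as the uncertainty statistic is an assumption rather than a description of the model's actual method.

```python
import numpy as np


def toy_stochastic_sr(lr, rng, scale=4):
    """Stand-in for a stochastic SR sampler (NOT the LDSR-S2 model).

    Upsamples by pixel repetition and adds noise that grows with the local
    gradient, mimicking regions that are harder to reconstruct.
    """
    up = np.repeat(np.repeat(lr, scale, axis=-2), scale, axis=-1)
    gy, gx = np.gradient(up, axis=(-2, -1))
    noise_scale = 0.01 + np.hypot(gx, gy)
    return up + rng.normal(size=up.shape) * noise_scale


def sampling_uncertainty(lr, n_samples=16, seed=0):
    """Return (mean prediction, per-pixel std) over repeated samples."""
    rng = np.random.default_rng(seed)
    samples = np.stack([toy_stochastic_sr(lr, rng) for _ in range(n_samples)])
    return samples.mean(axis=0), samples.std(axis=0)


# Four bands (R, G, B, NIR) with a sharp vertical edge in the middle.
lr = np.zeros((4, 8, 8))
lr[:, :, 4:] = 1.0
mean_sr, uncertainty = sampling_uncertainty(lr)
```

In this toy setup the uncertainty map is highest along the edge and close to zero in the flat areas, which is the qualitative behaviour the paragraph above describes for heterogeneous regions.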

Once the RGB+NIR bands have been enhanced, the high-resolution outputs can be passed to the SEN2SR framework, which uses them as a spatial reference to super-resolve the remaining spectral bands. This cascaded approach is designed to preserve both fidelity and consistency across the entire Sentinel-2 stack.

Installing opensr-model via pip is the easiest way to run the model itself. It gives access to both the model and the pretrained checkpoints, but contains no supplementary code to help users embed the model in their workflows. This repository is therefore best suited for adaptation and use by other researchers and people interested in experimenting with the code.

The Full-Band SR Engine: SEN2SR

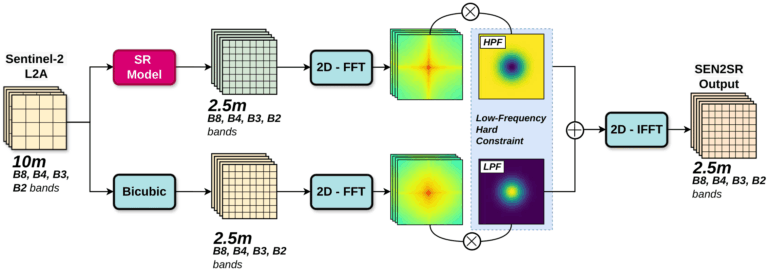

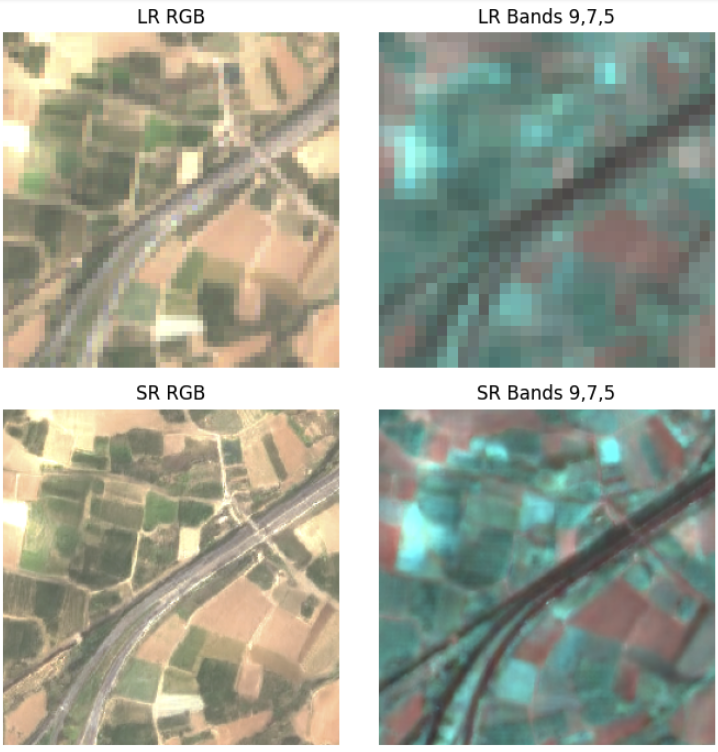

SEN2SR is our radiometrically and spatially consistent super-resolution pipeline for Sentinel-2 imagery. It is designed to enhance both the 10 m RGB+NIR bands and the 20 m red-edge and SWIR bands to a unified resolution of 2.5 m, ensuring precise spectral fidelity and geometric alignment across the stack. Unlike traditional SR methods that risk hallucinating false textures or introducing spectral distortion, SEN2SR is built on a physically grounded and explainable framework.

Most deep learning super-resolution models struggle when transitioning from synthetic training data to real-world satellite imagery. This often leads to undesirable effects such as visual artifacts, spatial misalignments, and spectral hallucinations that compromise the reliability of downstream applications. SEN2SR addresses these challenges through a carefully engineered pipeline. It is trained on SEN2NAIPv2, a harmonized synthetic dataset explicitly designed to mimic the spectral and spatial characteristics of Sentinel-2 imagery, thereby minimizing domain shift. Furthermore, the framework enforces a rigorous super-resolution protocol that constrains the reconstructed low-frequency components of the output to align with the original Sentinel-2 input, ensuring physical and spectral consistency. As a result, SEN2SR delivers robust and artifact-free outputs across a wide range of land cover types – from densely built urban environments to heterogeneous agricultural regions – maintaining both structural fidelity and radiometric realism.
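The low-frequency constraint described above can be illustrated with a simple consistency step: after super-resolution, the output is adjusted so that degrading it back to the input resolution reproduces the original pixels. The sketch below is a simplified illustration under stated assumptions, not SEN2SR's actual implementation. Block averaging is used as the low-pass operator purely for simplicity, and `block_mean` and `enforce_low_frequency` are hypothetical names.

```python
import numpy as np


def block_mean(x, scale):
    """Downsample (..., H, W) by averaging non-overlapping scale x scale blocks."""
    *lead, h, w = x.shape
    blocks = x.reshape(*lead, h // scale, scale, w // scale, scale)
    return blocks.mean(axis=(-3, -1))


def enforce_low_frequency(sr, lr, scale=4):
    """Shift each scale x scale block of `sr` so its mean equals the matching `lr` pixel.

    The within-block detail (high frequencies) of `sr` is left untouched.
    """
    residual = lr - block_mean(sr, scale)
    return sr + np.repeat(np.repeat(residual, scale, axis=-2), scale, axis=-1)


rng = np.random.default_rng(0)
lr = rng.random((4, 8, 8))        # low-resolution input, 4 bands
sr = rng.random((4, 32, 32))      # unconstrained model output at 4x
sr_consistent = enforce_low_frequency(sr, lr)
```

After this step, `block_mean(sr_consistent, 4)` matches `lr` up to floating-point error, so any texture the model adds cannot change the radiometry seen at the original resolution.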

The package can be run with any reference RGB-NIR model, and it ships with lightweight SWIN implementations.

Quickstart

Installing the sen2sr package via pip is the recommended way to run full-spectrum super-resolution of Sentinel-2 imagery. It includes pretrained models, inference utilities, and a clean API for applying spatially and radiometrically consistent super-resolution to both 10 m and 20 m bands. Unlike opensr-model, sen2sr comes with end-to-end support for large-scene processing, chunked inference, and harmonization of spectral outputs – making it ideal for direct use in scientific pipelines or production workflows.

The package handles the model orchestration internally and is focused on ease of use for applied users in Earth observation, agriculture, and environmental monitoring.

Using SEN2SR and LDSR-S2 to generate SR Products

To perform full-scene super-resolution of Sentinel-2 imagery down to 2.5 m resolution, the flagship latent diffusion model and the SEN2SR package can be used in a complementary and modular workflow.

The process starts by isolating the 10 m bands (B02, B03, B04, and B08, corresponding to blue, green, red, and NIR), which are passed to the latent diffusion super-resolution model (opensr-model). This model operates in the latent space to generate 2.5 m RGBN predictions with high spatial fidelity and intrinsic uncertainty quantification.

Once the 10 m bands are super-resolved, the SEN2SR package takes over to upsample the remaining 20 m bands (B05–B07 for red edge, B11–B12 for SWIR, and optionally B8A for narrow NIR) in a Wald-guided fashion, using the already-enhanced RGBN predictions as a spatial reference. This ensures that all bands are spatially aligned and consistent at the target resolution.

The entire process can be executed programmatically in Python using the two packages in tandem, yielding a scientifically grounded super-resolution output suitable for Earth observation applications.
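The data flow of this cascade can be sketched as follows. This is a shape-level illustration only: it does not call opensr-model or sen2sr, and both `sr_rgbn_placeholder` and `sr_20m_placeholder` are hypothetical stand-ins that use nearest-neighbour upsampling where the real models would run. The assumed input is a dictionary mapping band names to 2D arrays, with 20 m bands at half the size of the 10 m bands.

```python
import numpy as np

BANDS_10M = ["B02", "B03", "B04", "B08"]                 # blue, green, red, NIR
BANDS_20M = ["B05", "B06", "B07", "B8A", "B11", "B12"]   # red edge, narrow NIR, SWIR


def upsample_nearest(x, factor):
    """Nearest-neighbour upsampling of the last two axes."""
    return np.repeat(np.repeat(x, factor, axis=-2), factor, axis=-1)


def sr_rgbn_placeholder(rgbn_10m):
    """Stand-in for the RGB-NIR model: 10 m -> 2.5 m (factor 4)."""
    return upsample_nearest(rgbn_10m, 4)


def sr_20m_placeholder(bands_20m, reference_2p5m):
    """Stand-in for reference-guided SR: 20 m -> 2.5 m (factor 8)."""
    up = upsample_nearest(bands_20m, 8)
    if up.shape[-2:] != reference_2p5m.shape[-2:]:
        raise ValueError("20 m bands do not align with the 2.5 m reference")
    return up


def cascade(scene):
    """Run the two-stage workflow and return all bands on the 2.5 m grid."""
    rgbn = np.stack([scene[b] for b in BANDS_10M])
    rest = np.stack([scene[b] for b in BANDS_20M])
    rgbn_sr = sr_rgbn_placeholder(rgbn)          # stage 1: 10 m bands
    rest_sr = sr_20m_placeholder(rest, rgbn_sr)  # stage 2: 20 m bands, guided
    names = BANDS_10M + BANDS_20M
    return dict(zip(names, np.concatenate([rgbn_sr, rest_sr])))


scene = {b: np.random.rand(16, 16) for b in BANDS_10M}
scene.update({b: np.random.rand(8, 8) for b in BANDS_20M})
product = cascade(scene)
```

Every band in `product` ends up on the same 64 x 64 grid here, which mirrors the goal of the workflow: one spatially aligned stack at the target resolution, with the second stage depending on the output of the first.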

📄 LDSR-S2 Paper: IEEE JSTARS

📄 SEN2SR Preprint: SSRN

If you use these models or packages in your work, please cite:

@ARTICLE{donike2025LDSRS2,
  author={Donike, Simon and Aybar, Cesar and Gómez-Chova, Luis and Kalaitzis, Freddie},
  journal={IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing},
  title={Trustworthy Super-Resolution of Multispectral Sentinel-2 Imagery With Latent Diffusion},
  year={2025},
  volume={18},
  pages={6940-6952},
  doi={10.1109/JSTARS.2025.3542220}}

@ARTICLE{aybar2025SEN2SR,
  title={A Radiometrically and Spatially Consistent Super-Resolution Framework for Sentinel-2},
  author={Aybar, Cesar and Contreras, Julio and Donike, Simon and Portalés-Julià, Enrique and Mateo-García, Gonzalo and Gómez-Chova, Luis},
  journal={SSRN Electronic Journal},
  year={2025},
  note={Available at \url{http://dx.doi.org/10.2139/ssrn.5247739}},
  doi={10.2139/ssrn.5247739}}

Still Experimental, but Actively Evolving

This ecosystem is research-grade, but production-ready releases are around the corner. Join our mission to make trusted, open super-resolution available to everyone by collaborating on our GitHub repositories and engaging with other users.