NIR-GAN: Synthesizing Near-Infrared from RGB with Location Embeddings and Task-Driven Losses

We’re excited to share that our work, “Near-Infrared Band Synthesis From Earth Observation Imagery With Learned Location Embeddings and Task-Driven Loss Functions,” has been published open access by IEEE.

Many remote sensing workflows depend on near-infrared (NIR) information, for example NDVI and NDWI for vegetation and water monitoring, but RGB-only archives simply don't have it. NIR-GAN addresses that gap by learning to generate a realistic NIR band directly from RGB, so practitioners can compute familiar indices and train multispectral models even when no NIR band was acquired.

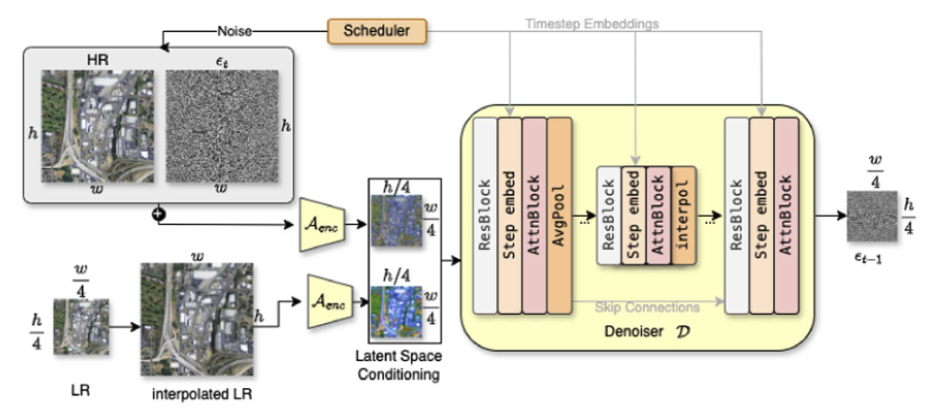

What we built

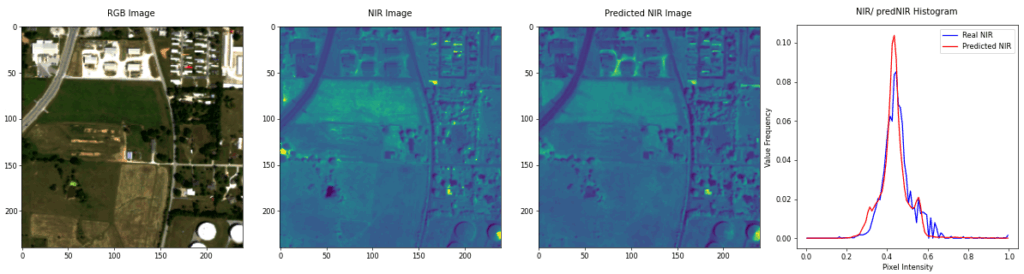

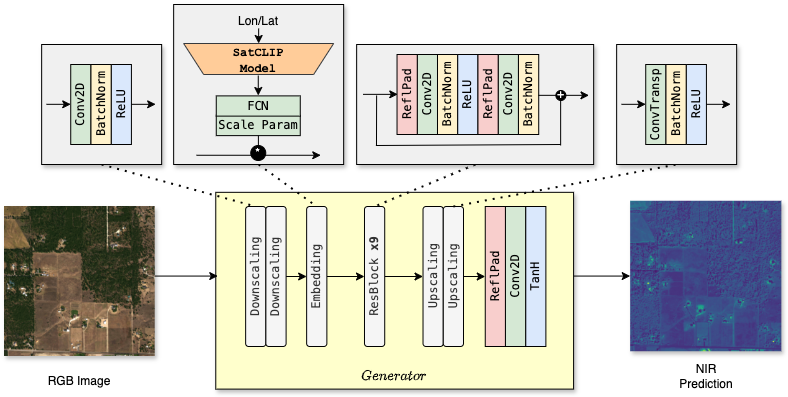

NIR-GAN (conditional GAN): An image-to-image model that predicts NIR from RGB.

Learned location embeddings: Compact vectors encoding geographic and climatic context to improve generalization across regions and sensors.

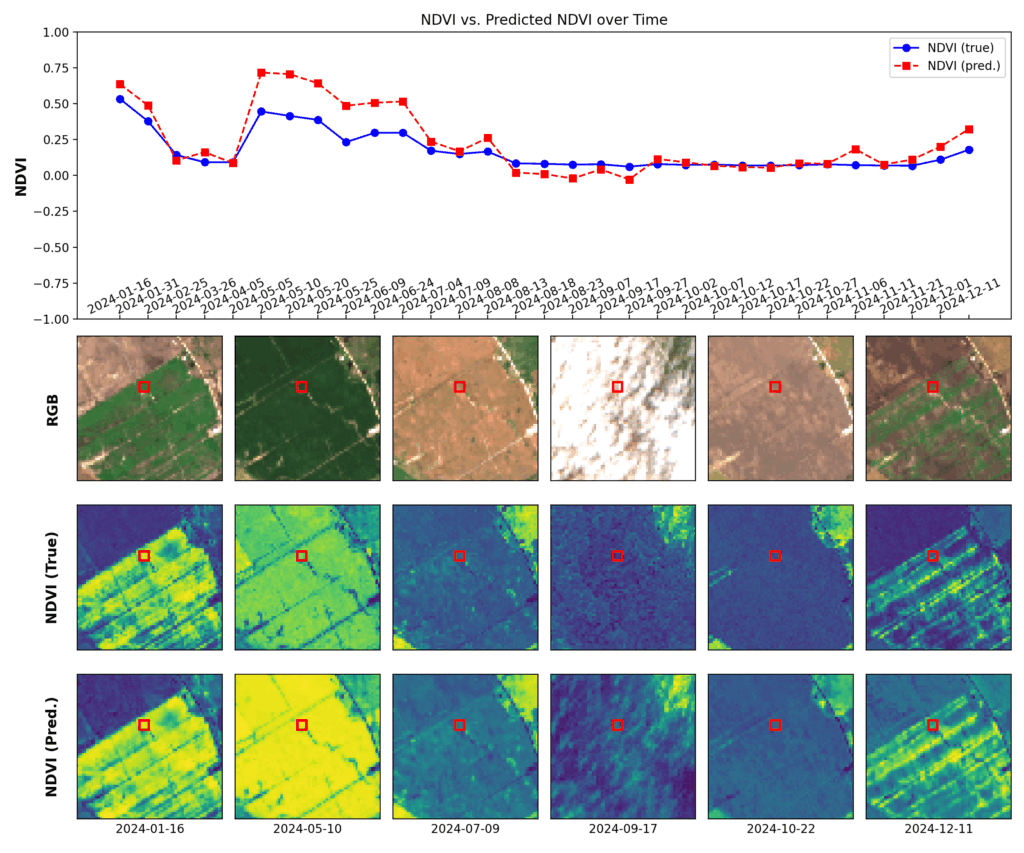

Task-driven loss design: Differentiable, index-derived losses (e.g., NDVI/NDWI) that optimize the synthesized NIR specifically for downstream remote sensing tasks, not just for pixel fidelity.
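To make the idea of an index-derived loss concrete, here is a minimal NumPy sketch. It is not the formulation from the paper: the L1 penalty, the weights `w_ndvi` and `w_ndwi`, the `EPS` stabilizer, and the function names are all assumptions chosen for illustration. Only the index definitions themselves are standard: NDVI = (NIR - Red) / (NIR + Red) and NDWI = (Green - NIR) / (Green + NIR).

```python
import numpy as np

EPS = 1e-6  # assumed stabilizer: avoids division by zero over very dark pixels


def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return (nir - red) / (nir + red + EPS)


def ndwi(nir, green):
    """Normalized Difference Water Index (green/NIR form)."""
    return (green - nir) / (green + nir + EPS)


def index_loss(nir_pred, nir_true, rgb, w_ndvi=1.0, w_ndwi=1.0):
    """Mean absolute difference between indices computed from the predicted
    and the reference NIR band. Hypothetical loss, for illustration only."""
    red, green = rgb[..., 0], rgb[..., 1]
    l_ndvi = np.mean(np.abs(ndvi(nir_pred, red) - ndvi(nir_true, red)))
    l_ndwi = np.mean(np.abs(ndwi(nir_pred, green) - ndwi(nir_true, green)))
    return float(w_ndvi * l_ndvi + w_ndwi * l_ndwi)


# Toy data standing in for reflectance values in [0, 1]
rng = np.random.default_rng(0)
rgb = rng.uniform(0.05, 0.4, size=(64, 64, 3))
nir_true = rng.uniform(0.1, 0.6, size=(64, 64))
nir_pred = nir_true + rng.normal(0.0, 0.02, size=(64, 64))

print(index_loss(nir_true, nir_true, rgb))  # identical bands give zero loss
print(index_loss(nir_pred, nir_true, rgb))  # small positive value
```

Because the indices are built from elementwise arithmetic only, the same expressions could be written in an autodiff framework so that gradients flow back to the generator; in a setup like this, such a term would typically be added to the usual adversarial and pixel-level losses.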

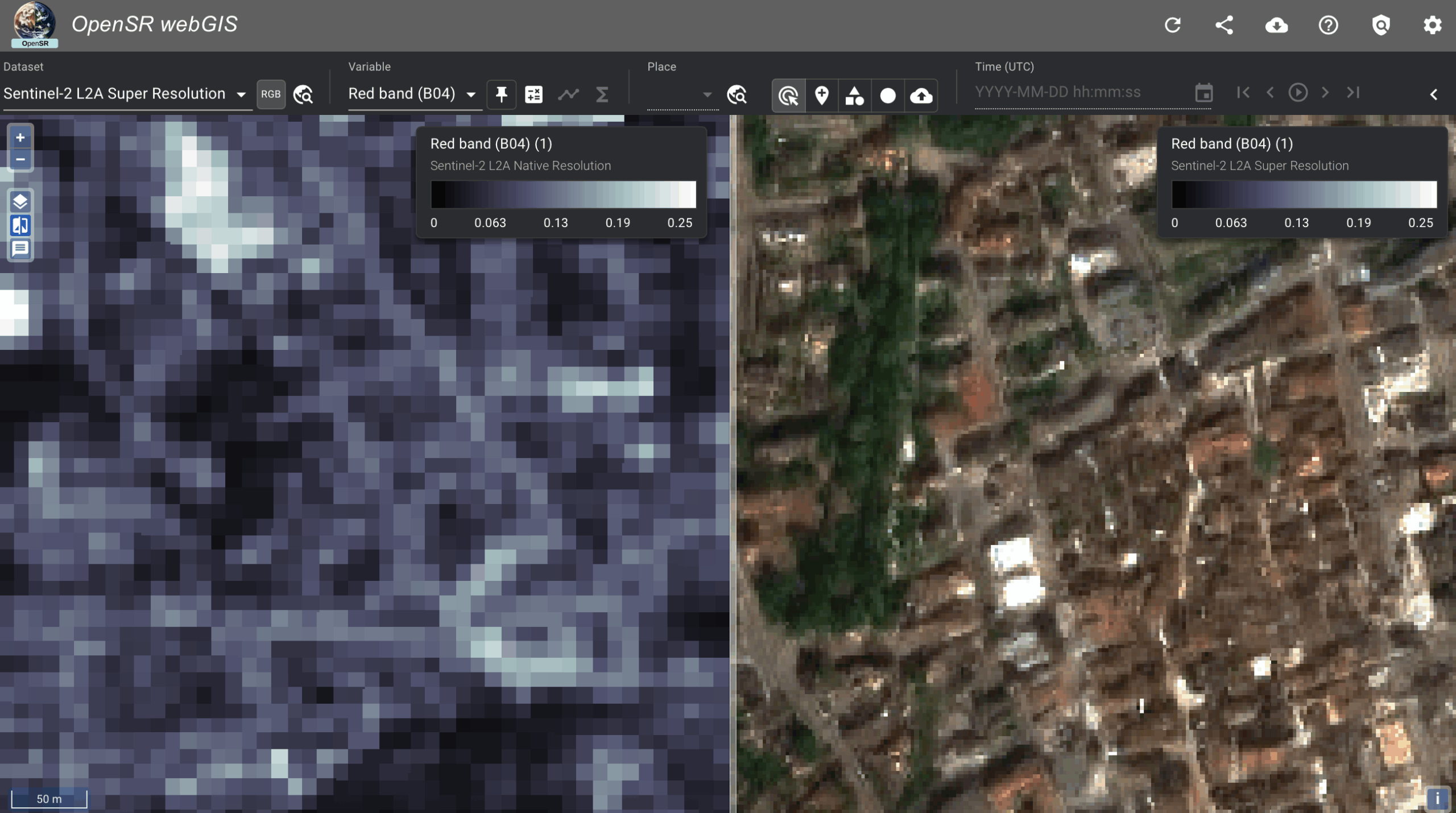

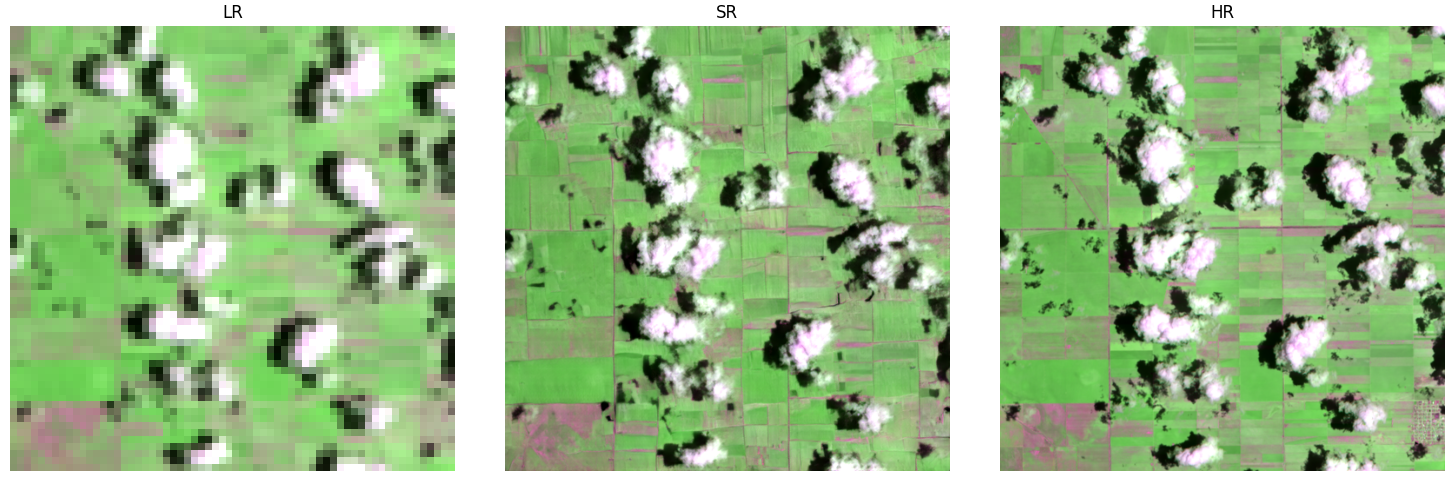

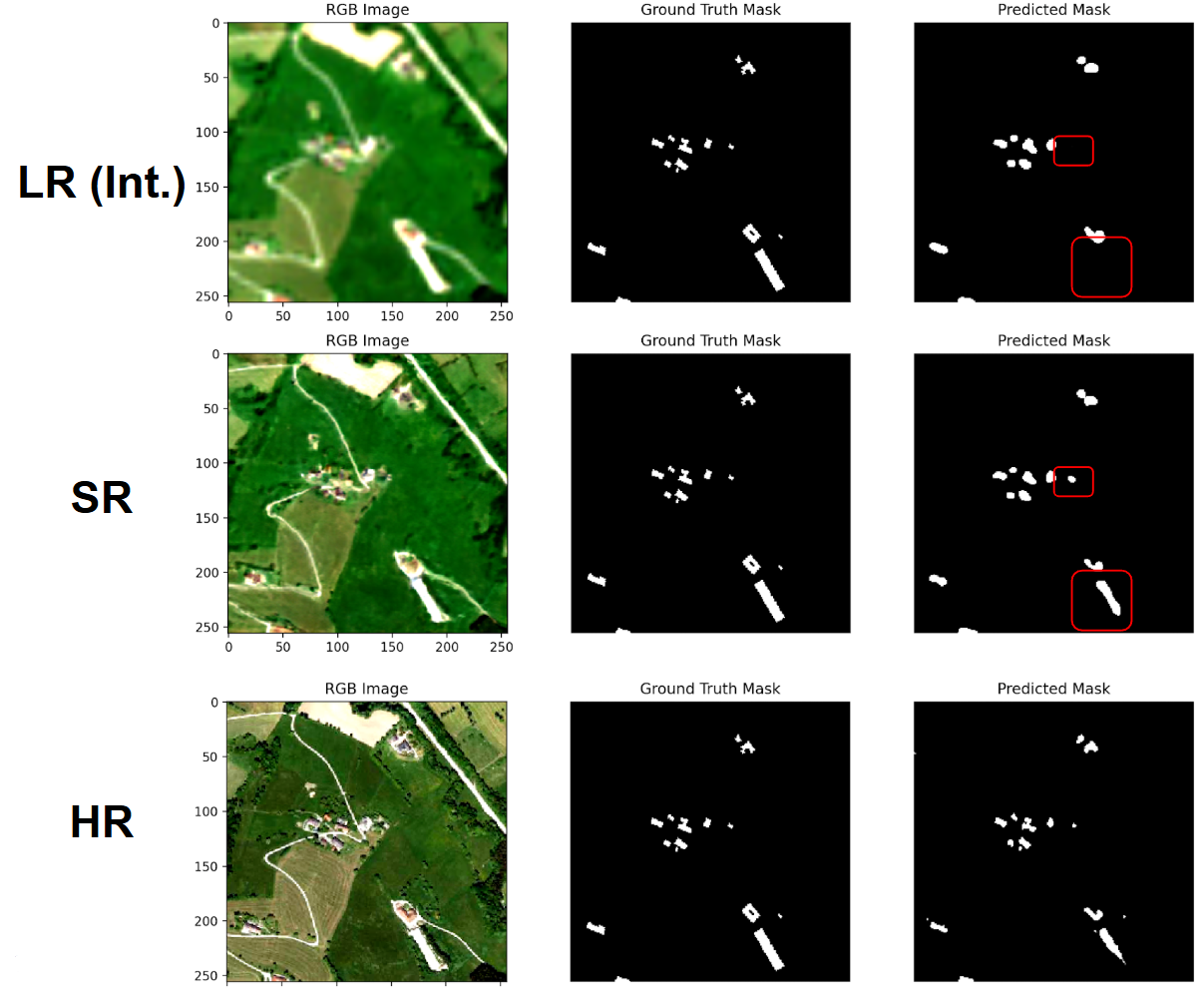

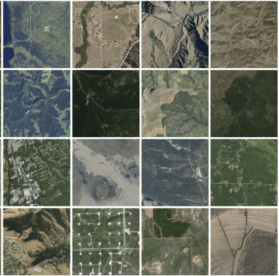

Cross-sensor robustness: Effective across data sources with different spectral/spatial characteristics.

Results at a glance

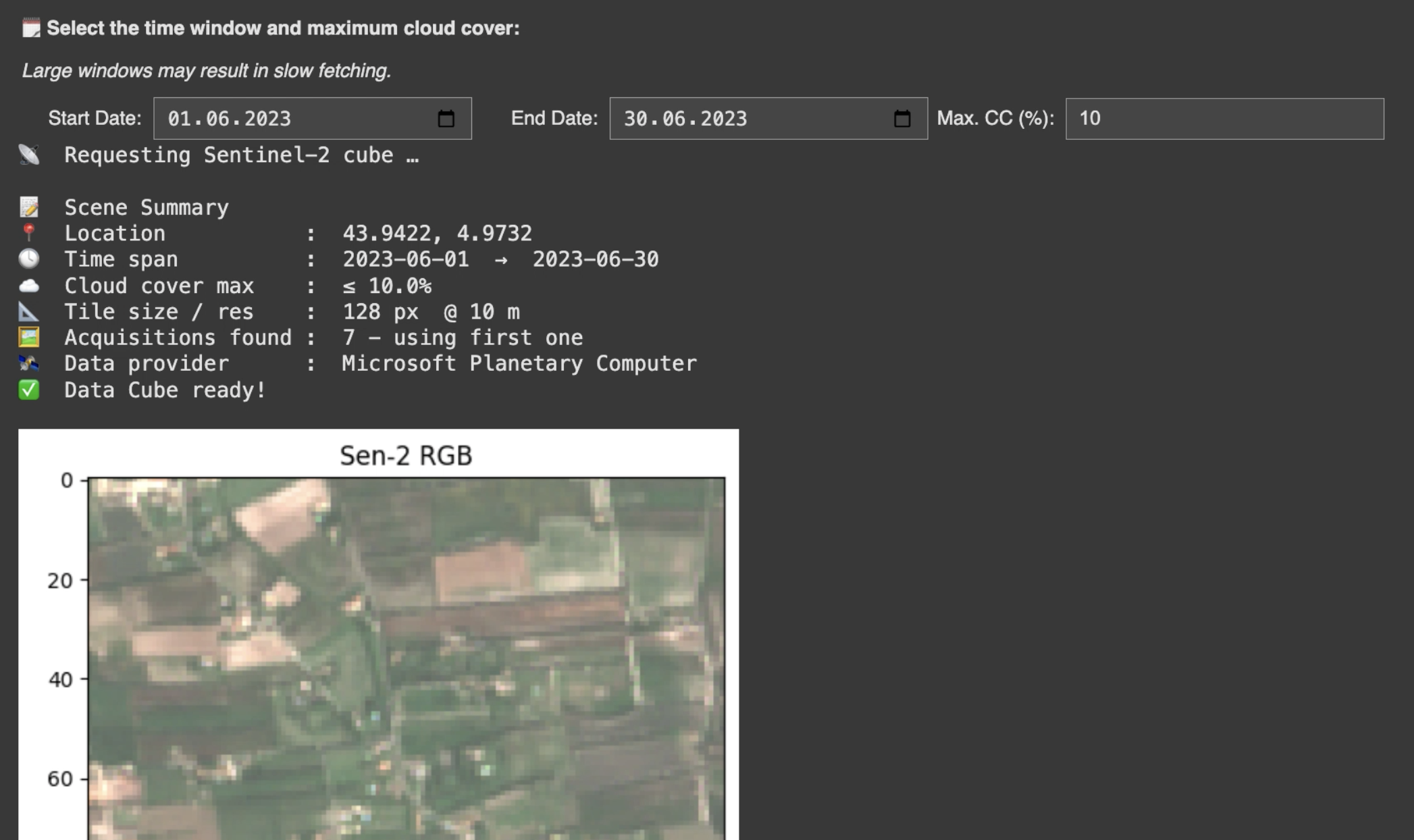

Up to 26.6 dB PSNR on Sentinel-2 for the synthesized NIR band.
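For readers less familiar with the metric, the sketch below shows how PSNR is commonly computed for a single band. The `psnr` helper and the assumption that pixel values are normalized to a data range of 1.0 are illustrative; the evaluation setup used in the paper may differ.

```python
import numpy as np


def psnr(pred, target, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range^2 / MSE)."""
    diff = np.asarray(pred, dtype=np.float64) - np.asarray(target, dtype=np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


# Toy check: a constant error of 0.1 on a [0, 1] range gives MSE = 0.01
target = np.zeros((4, 4))
pred = np.full((4, 4), 0.1)
print(round(psnr(pred, target), 2))  # 20.0
```

Under that normalization assumption, 26.6 dB corresponds to a root-mean-square error of about 10^(-26.6/20) ≈ 0.047, or roughly 4.7% of the data range.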

Visually realistic outputs that preserve structural patterns relevant for vegetation/water monitoring.

Enables creation of partly synthetic multispectral datasets, improving training coverage where NIR is missing.

Why it matters

Compute NDVI/NDWI and related indices on RGB-only archives.

Augment training data for multispectral models without expensive acquisitions.

Increase coverage for land-cover mapping, change detection, and environmental monitoring where NIR is unavailable.

🔗 Read the full article (Open Access): IEEE Xplore