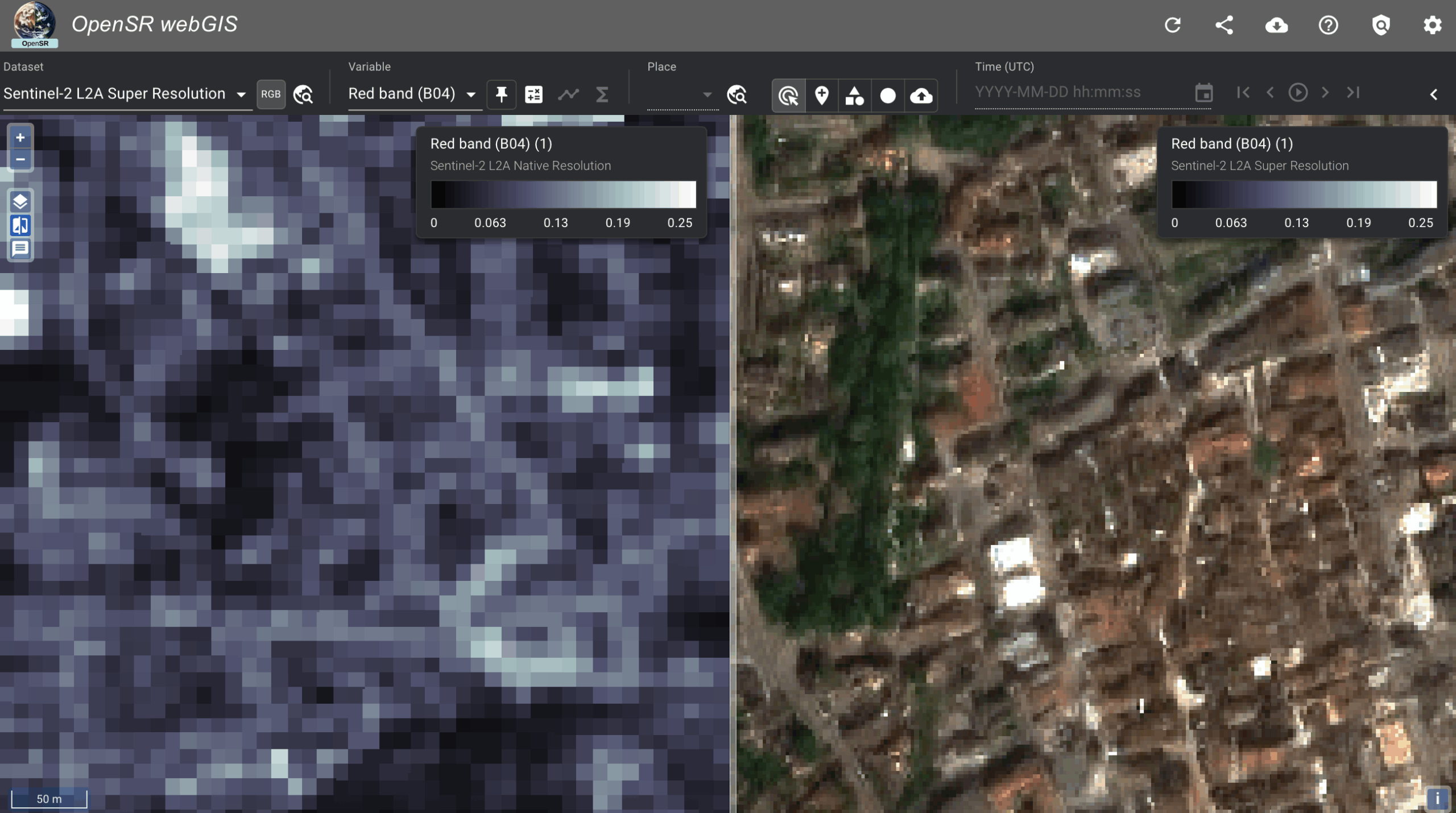

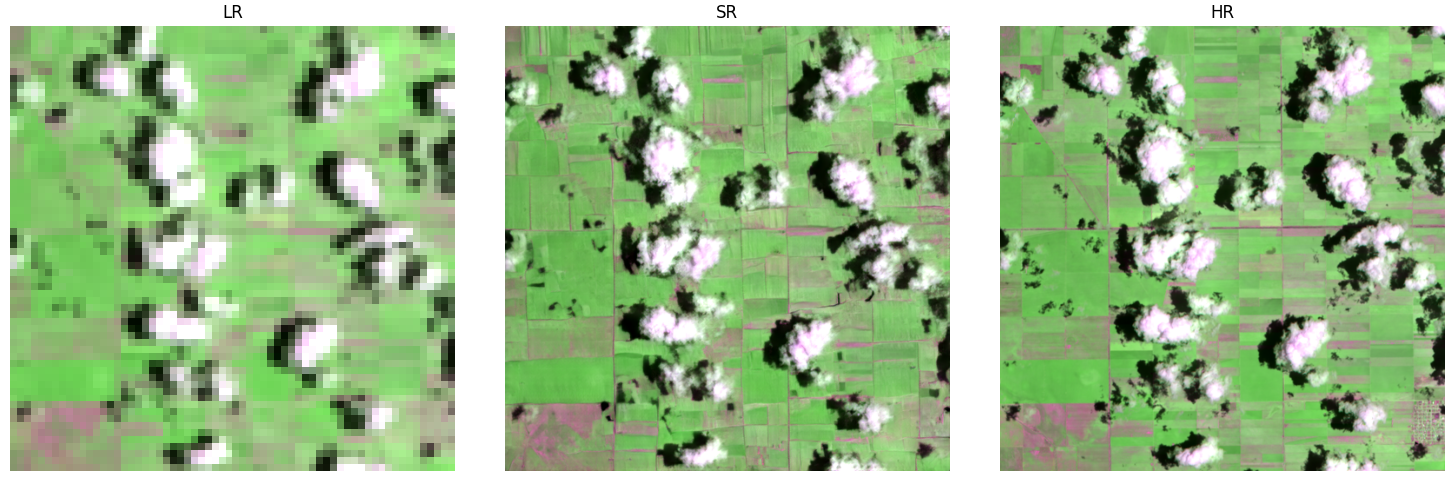

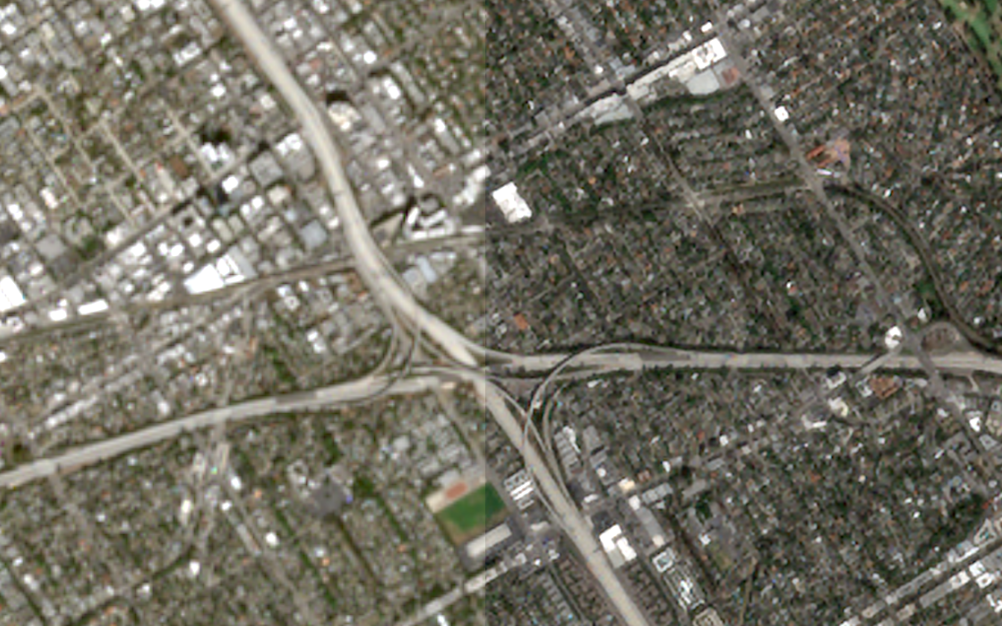

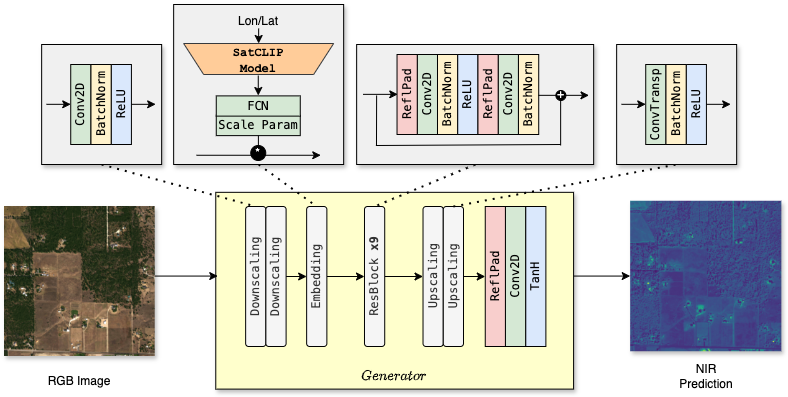

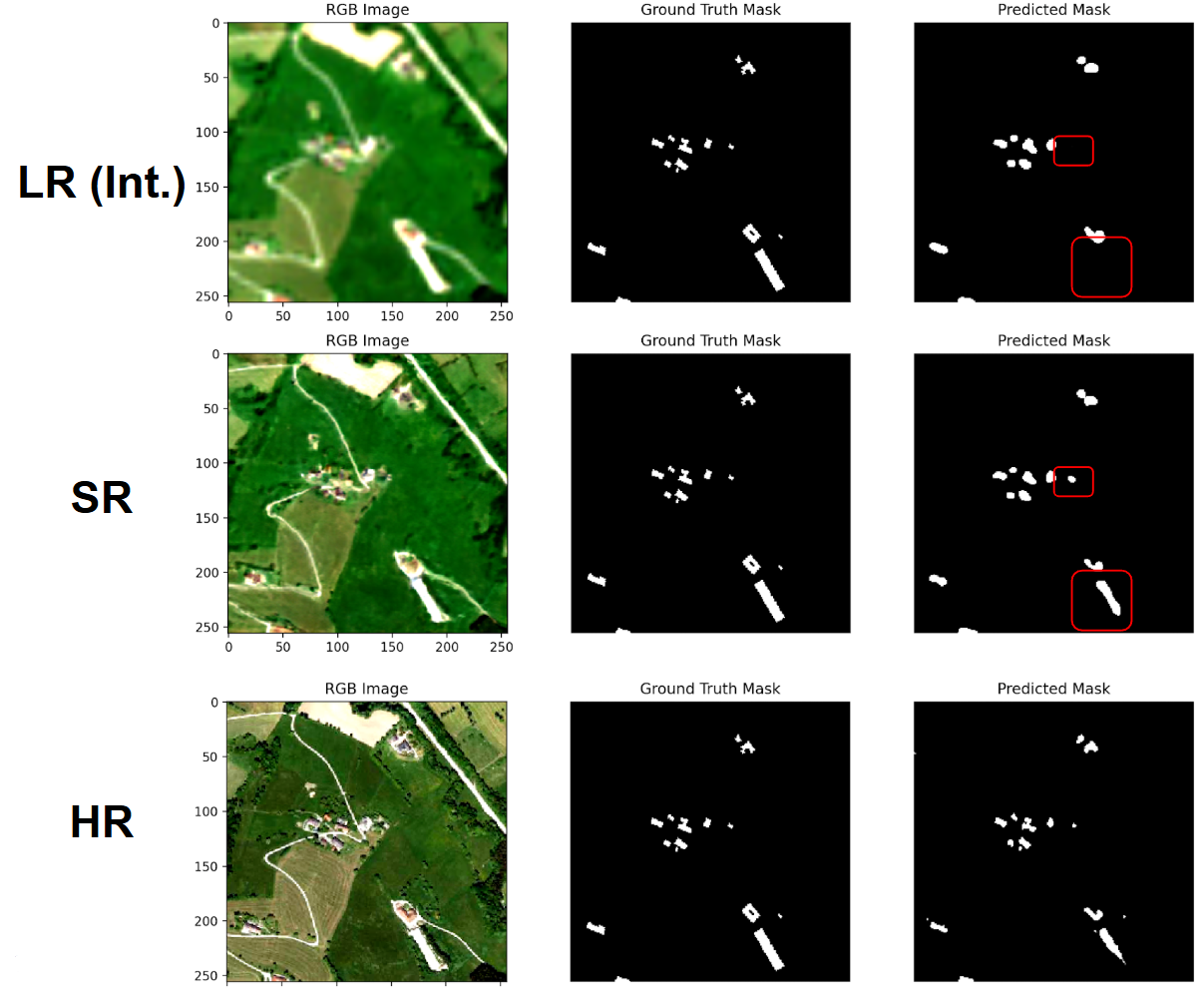

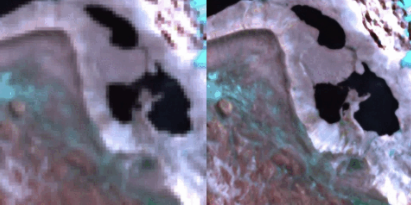

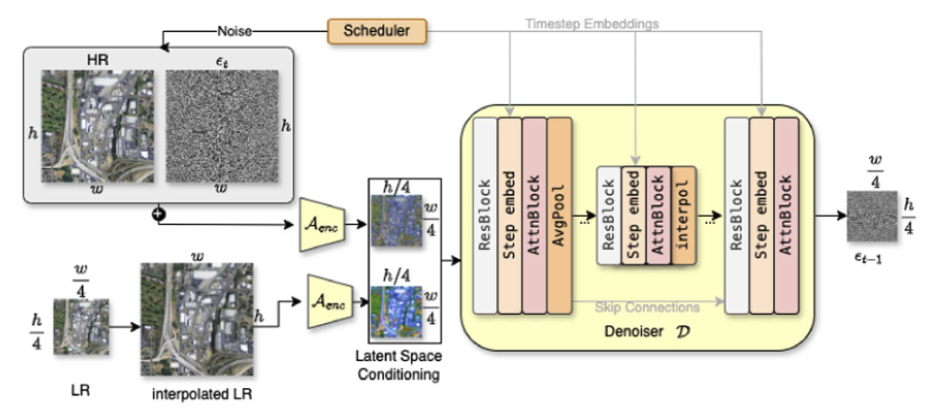

We’re excited to share our latest preprint, “A Radiometrically and Spatially Consistent Super-Resolution Framework for Sentinel-2,” now available on SSRN! This work introduces SEN2SR, a novel deep learning framework designed to generate high-resolution (2.5 m) Sentinel-2 imagery while maintaining spectral integrity, spatial alignment, and geometric consistency, three areas where existing super-resolution (SR) models commonly fall short.

What’s New in SEN2SR?

Unlike many existing SR models that may introduce hallucinations or distort reflectance values, SEN2SR tackles these issues by:

- Training on harmonized synthetic datasets tailored to Sentinel-2’s spectral and spatial properties.
- Applying a low-frequency hard constraint so that models add only high-frequency detail while the low-frequency content of the original image is preserved.
- Supporting both 10 m and 20 m Sentinel-2 bands, including Red Edge and SWIR.
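
To make the low-frequency hard constraint more concrete, here is a minimal sketch of the general idea, not the implementation from the paper. It assumes an ideal (binary) low-pass filter in the Fourier domain with a cutoff at the Nyquist frequency of the 10 m input; the preprint may use a different filter and may apply it at a different point in the pipeline. The function names `low_pass_mask` and `apply_low_frequency_constraint` are hypothetical, and the random arrays only stand in for a real band and a real model output.

```python
import numpy as np

def low_pass_mask(shape, cutoff):
    """Boolean mask selecting spatial frequencies at or below `cutoff` (cycles/pixel)."""
    fy = np.fft.fftfreq(shape[0])[:, None]
    fx = np.fft.fftfreq(shape[1])[None, :]
    return np.sqrt(fy**2 + fx**2) <= cutoff

def apply_low_frequency_constraint(reference, sr, cutoff):
    """Keep the low frequencies of `reference` and the high frequencies of `sr`.

    `reference` is the original image upsampled to the SR grid; `sr` is the
    raw model output on the same grid.
    """
    mask = low_pass_mask(sr.shape, cutoff)
    spectrum = np.where(mask, np.fft.fft2(reference), np.fft.fft2(sr))
    # The mask is symmetric in frequency, so the result is real up to rounding.
    return np.fft.ifft2(spectrum).real

# Toy data (assumption): one 16x16 band upsampled 4x, i.e. 10 m -> 2.5 m.
rng = np.random.default_rng(0)
scale = 4
lr = rng.random((16, 16))
reference = np.kron(lr, np.ones((scale, scale)))  # nearest-neighbour upsample
sr = reference + 0.05 * rng.standard_normal(reference.shape)  # stand-in for model output

constrained = apply_low_frequency_constraint(reference, sr, cutoff=0.5 / scale)
```

Because the mask is binary, the constrained image matches the upsampled input exactly below the cutoff, so the model can only contribute detail above it. That is the sense in which the constraint is "hard" in this sketch.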

The paper evaluates various model backbones, including CNNs, Swin Transformers, and Mamba, and integrates explainable AI (xAI) methods to provide interpretability and confidence in results. SEN2SR consistently outperforms state-of-the-art SR methods in PSNR, reflectance deviation, and spatial misalignment, and shows strong transferability to downstream tasks like land cover classification.

Read the Preprint

📝 Preprint on SSRN: https://ssrn.com/abstract=5247739

This research not only advances the state of SR in Earth observation, but also emphasizes the importance of trustworthy, artifact-free image enhancement for real-world environmental monitoring.