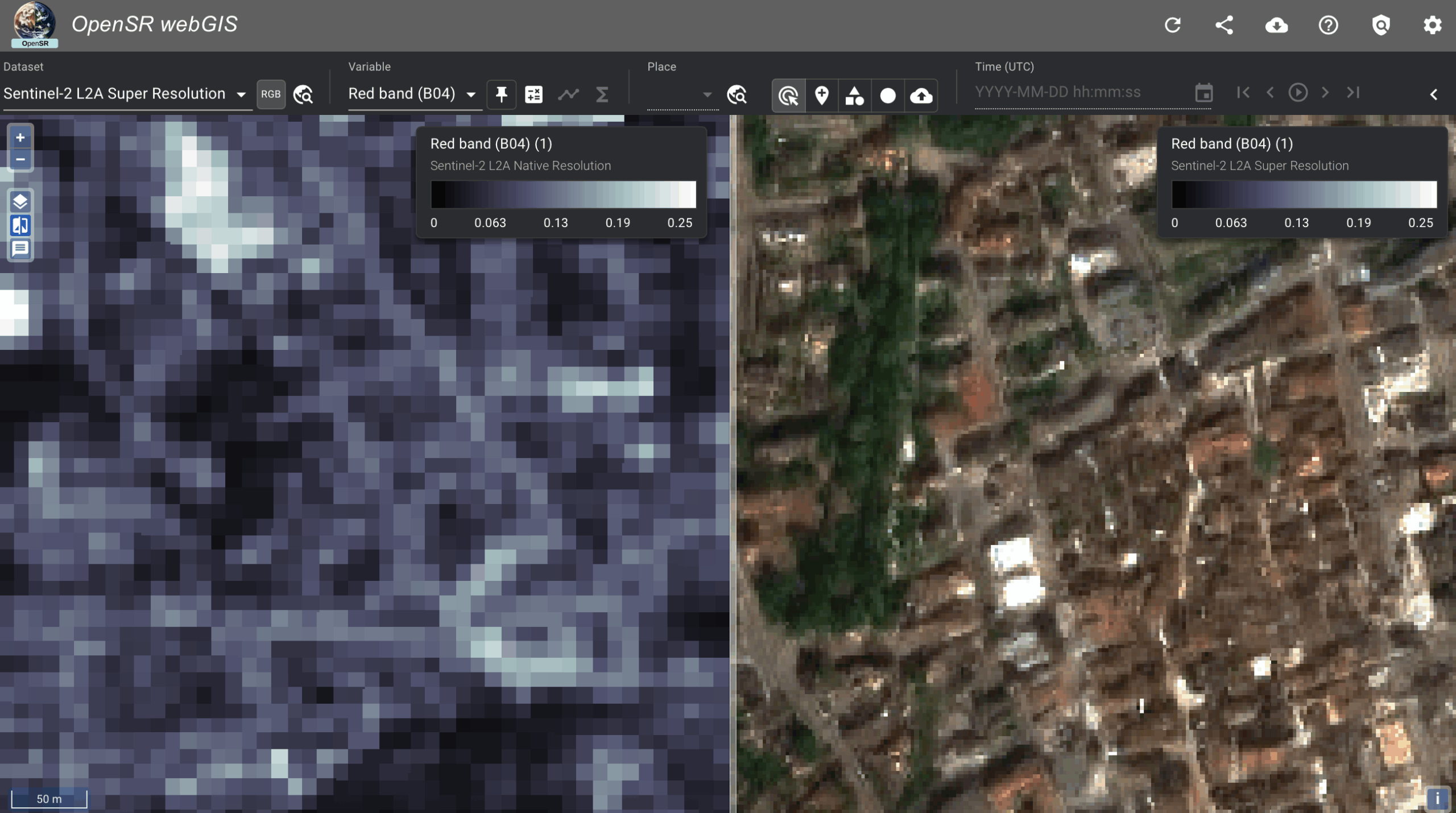

Super-Resolve Sentinel-2 in Your Browser — No Code Required

Have you ever looked at a 10 m Sentinel-2 scene and wished you could zoom in without downloading huge stacks of raw data or writing Python? Now you can. The brand-new LDSR-S2 “no-code” Colab notebook lets anyone, whether researcher, analyst or student, run state-of-the-art latent-diffusion super-resolution from the browser, with the computation done on Google Colab's free GPUs.

Why it’s worth a try

Runs on free Colab hardware – one click, no installs, no GPU at home required.

Pick your area of interest (AOI) visually – pan an OpenStreetMap basemap and click once to capture lat/lon, or type the coordinates if you already know them.

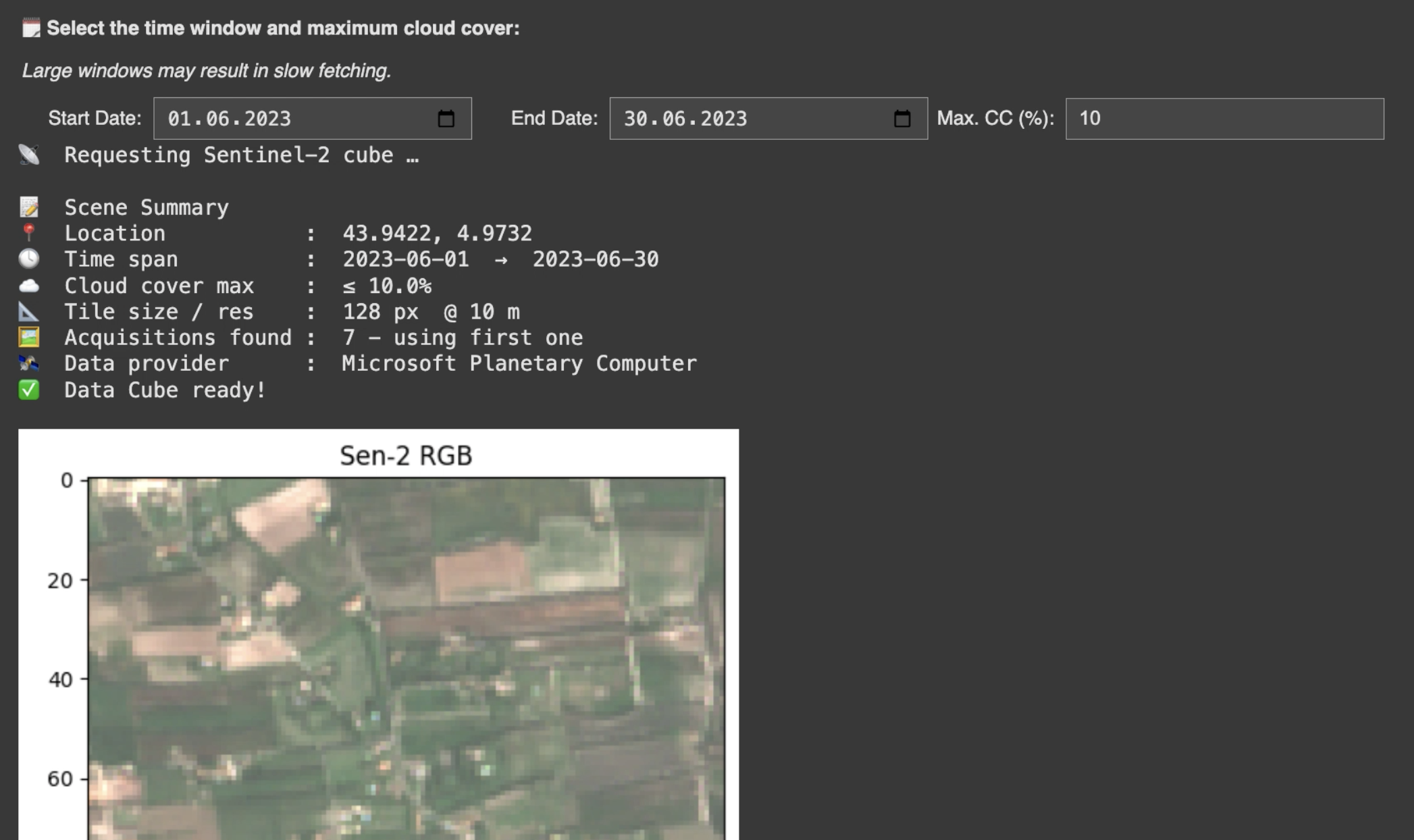
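A click gives a single latitude/longitude, while the model works on a 128 × 128 px patch (1280 m per side at 10 m). A minimal sketch of turning the click into a bounding box, assuming a spherical-Earth approximation of about 111,320 m per degree of latitude; `bbox_around_point` is a hypothetical helper, not a function from the notebook:

```python
import math

# Approximate metres per degree of latitude (spherical-Earth assumption).
METERS_PER_DEG_LAT = 111_320.0


def bbox_around_point(lat: float, lon: float, size_m: float = 1280.0) -> tuple:
    """Return (min_lon, min_lat, max_lon, max_lat) for a square of side size_m centred on (lat, lon).

    Hypothetical helper using an equirectangular approximation, adequate for
    kilometre-scale boxes away from the poles.
    """
    half = size_m / 2.0
    dlat = half / METERS_PER_DEG_LAT
    # A degree of longitude shrinks with cos(latitude).
    dlon = half / (METERS_PER_DEG_LAT * math.cos(math.radians(lat)))
    return (lon - dlon, lat - dlat, lon + dlon, lat + dlat)


# Example: a click near Madrid (40.4168 N, 3.7038 W)
print(bbox_around_point(40.4168, -3.7038))
```

Near the poles, or for much larger areas, projecting into the scene's UTM zone is the safer choice.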

Define your time window & cloud filter – a slider sets the maximum cloud cover (e.g. “max 10 % CC” keeps only scenes with at most 10 % cloud cover), so cloudy scenes are skipped.
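A date window plus a cloud-cover cap maps naturally onto a STAC item search against the Planetary Computer catalog. The sketch below only builds the request body; it assumes the `sentinel-2-l2a` collection id, the standard `eo:cloud_cover` property and the STAC API query extension, and `build_s2_search` is a hypothetical name, not the notebook's code:

```python
import json


def build_s2_search(bbox, start, end, max_cloud=10.0):
    """Build a STAC API /search body for Sentinel-2 L2A scenes (hypothetical helper).

    bbox      -- (min_lon, min_lat, max_lon, max_lat) in WGS84 degrees
    start/end -- RFC 3339 timestamps, e.g. "2024-06-01T00:00:00Z"
    max_cloud -- maximum scene cloud cover in percent (the slider value)
    """
    return {
        "collections": ["sentinel-2-l2a"],  # assumed Planetary Computer collection id
        "bbox": list(bbox),
        "datetime": f"{start}/{end}",  # closed interval "start/end"
        # STAC query extension: keep scenes with cloud cover <= max_cloud
        "query": {"eo:cloud_cover": {"lte": max_cloud}},
    }


body = build_s2_search(
    (-3.71, 40.41, -3.70, 40.42),
    "2024-06-01T00:00:00Z",
    "2024-06-30T23:59:59Z",
    max_cloud=10,
)
print(json.dumps(body, indent=2))
```

The same four pieces correspond to the `collections`, `bbox`, `datetime` and `query` arguments of `pystac_client.Client.search`, if you prefer a client library over a raw POST to the `/search` endpoint.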

Interactive preview – the notebook shows the native 10 m RGB preview and asks “Does this look OK?” before spending GPU time.

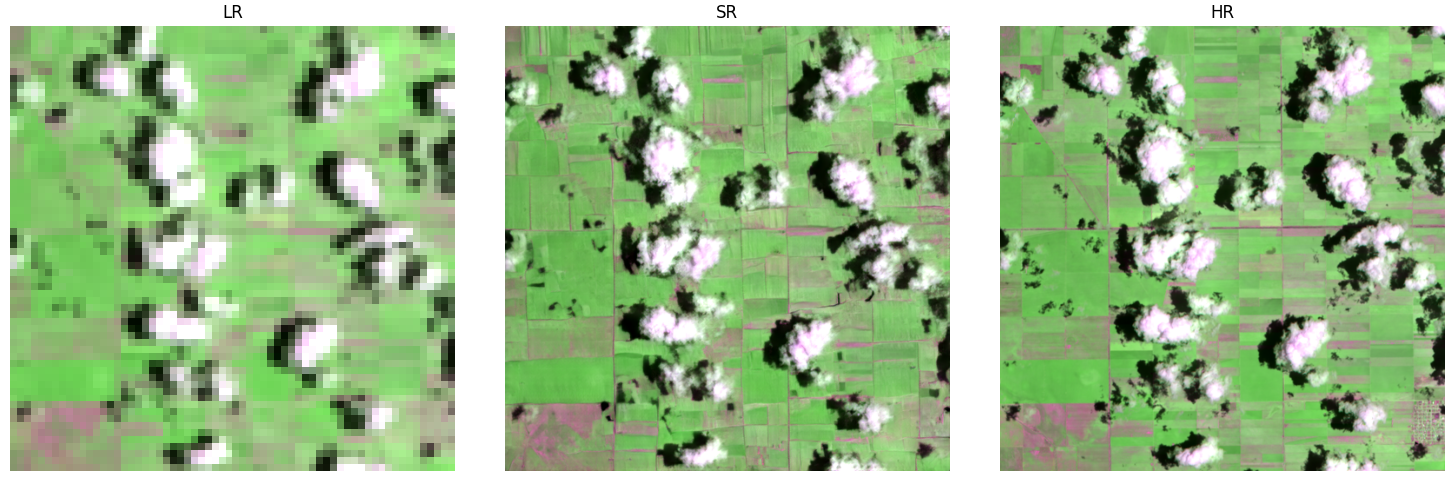

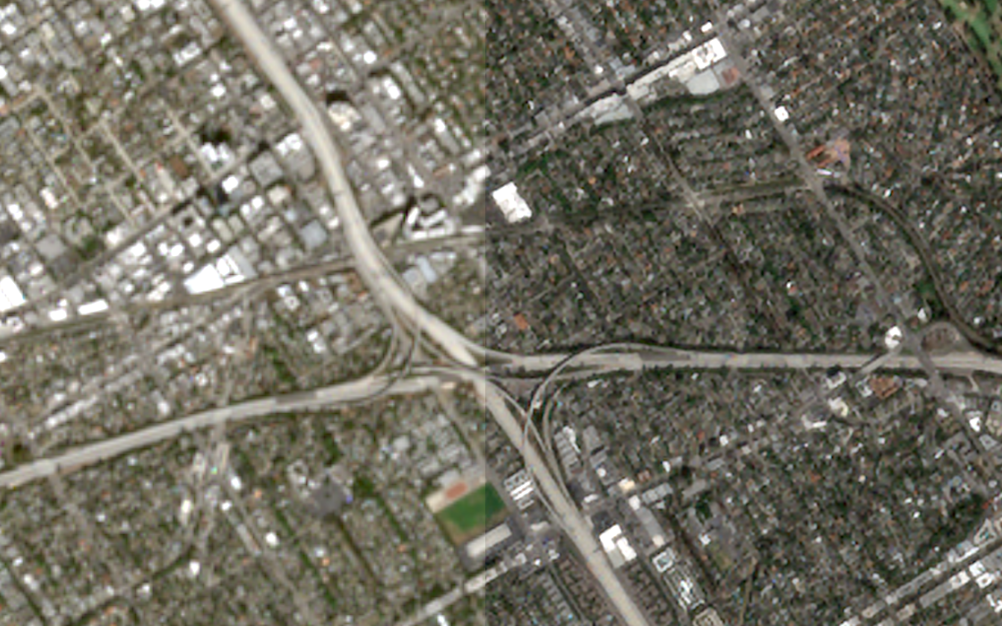

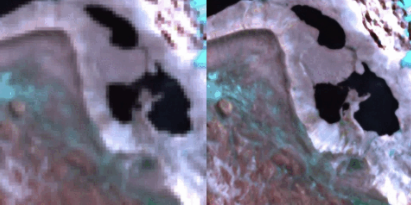
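Surface reflectance over most land is well below 1, so a raw RGB composite looks dark; previews are usually built with a clipped linear stretch. A minimal per-pixel sketch, assuming reflectance already scaled to 0–1 and a clip at 0.3 (a common display choice, not necessarily the notebook's); `to_display_byte` and `preview_pixel` are hypothetical names:

```python
def to_display_byte(reflectance: float, max_reflectance: float = 0.3) -> int:
    """Linearly map reflectance in [0, max_reflectance] to a display value in [0, 255]."""
    scaled = reflectance / max_reflectance
    scaled = min(max(scaled, 0.0), 1.0)  # clip values below 0 and above the cap
    return round(scaled * 255)


def preview_pixel(red: float, green: float, blue: float, max_reflectance: float = 0.3) -> tuple:
    """Return an 8-bit (R, G, B) triple for one pixel of the preview."""
    return tuple(to_display_byte(v, max_reflectance) for v in (red, green, blue))


print(preview_pixel(0.05, 0.08, 0.04))
```

Raising `max_reflectance` suits bright scenes (snow, sand) at the cost of darker vegetation.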

Instant evaluation – in ~30 s you'll see a side-by-side PNG of the low-resolution (LR) input vs. the super-resolved (SR) output, plus ready-to-download GeoTIFFs for both. Load them in QGIS/ArcGIS and decide whether LDSR-S2 fits your pipeline.
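The LR patch (128 × 128 px) and the SR patch (512 × 512 px) can only be shown at the same scale once the LR image is enlarged 4×; nearest-neighbour upsampling keeps each original 10 m pixel visible as a block. A minimal sketch on plain Python lists, with hypothetical helper names (not the notebook's code):

```python
def upsample_nearest(img, factor=4):
    """Nearest-neighbour upsampling of a 2-D list: each pixel becomes a factor x factor block."""
    return [
        [value for value in row for _ in range(factor)]  # repeat each pixel along the row
        for row in img
        for _ in range(factor)  # repeat each expanded row down the column
    ]


def side_by_side(lr, sr, factor=4):
    """Return one 2-D list with the upsampled LR panel on the left and the SR panel on the right."""
    lr_up = upsample_nearest(lr, factor)
    if len(lr_up) != len(sr) or len(lr_up[0]) != len(sr[0]):
        raise ValueError("upsampled LR and SR panels must have the same shape")
    return [left + right for left, right in zip(lr_up, sr)]


lr = [[0] * 128 for _ in range(128)]  # dummy 128 x 128 LR band
sr = [[1] * 512 for _ in range(512)]  # dummy 512 x 512 SR band
panel = side_by_side(lr, sr)
print(len(panel), len(panel[0]))  # 512 1024
```

With NumPy arrays the same result comes from `np.repeat` along both axes followed by `np.hstack`.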

Step-by-Step Guide

| Step | What to do | Tip |
|---|---|---|
| 1 | Open the notebook via the badge above. | You'll land in Google Colab. |
| 2 | Switch to a GPU runtime: Runtime → Change runtime type → GPU. | Free-tier GPUs are fine. |
| 3 | Run every cell: Runtime → Run all. | Colab installs dependencies and loads the model automatically. |
| 4 | In the GUI, select the start/end dates and the maximum cloud cover. | Narrow windows fetch faster. |
| 5 | Click “Enter coordinates” or “Select on map”. | Typing coordinates shows input boxes; the map lets you pan/zoom and click once. |
| 6 | Hit “Load Scene”. | The S2 10 m preview appears. |
| 7 | If the preview looks good, press “Use this scene”. | Otherwise, choose “Get different scene”. |
| 8 | Wait ~5 s while the GPU works. | |
| 9 | View the LR vs. SR comparison PNG. | The PNG is saved as example.png. |
| 10 | Download the GeoTIFFs: lr.tif (native 10 m) and sr.tif (2.5 m SR). | Files sidebar → right-click → Download. Both files are fully georeferenced and drop straight into QGIS/ArcGIS. |

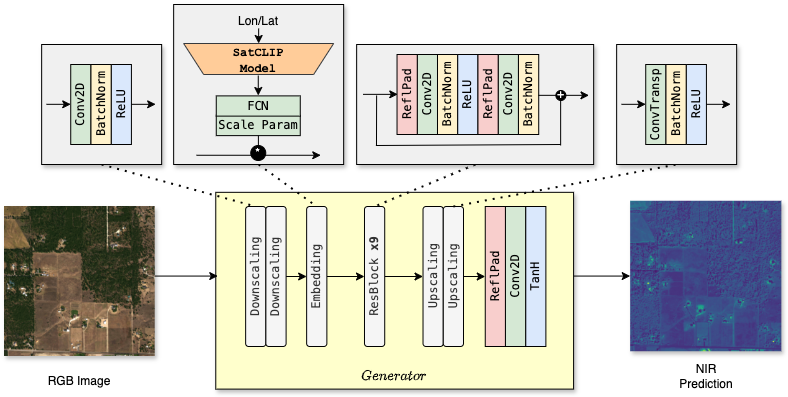

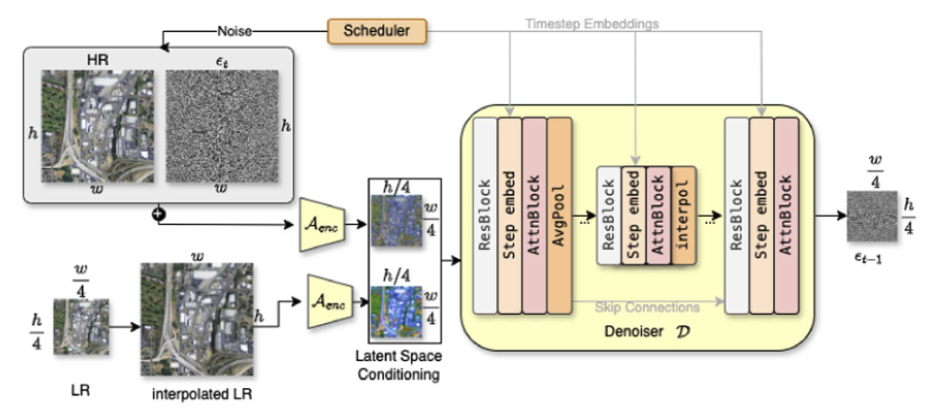
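Steps 6 and 7 form a simple accept/reject loop. The sketch below models it with a callback standing in for the “Use this scene” / “Get different scene” buttons; the assumption that candidates are offered from least to most cloudy, and the names `pick_scene` and `cloud_cover`, are illustrative rather than taken from the notebook:

```python
from typing import Callable, Iterable, Optional


def pick_scene(candidates: Iterable[dict], looks_ok: Callable[[dict], bool]) -> Optional[dict]:
    """Walk candidate scenes from clearest to cloudiest; return the first one the user accepts."""
    for scene in sorted(candidates, key=lambda s: s["cloud_cover"]):
        if looks_ok(scene):  # "Use this scene"
            return scene
        # otherwise "Get different scene": fall through to the next candidate
    return None  # every candidate rejected


scenes = [
    {"id": "a", "cloud_cover": 8.0},
    {"id": "b", "cloud_cover": 1.5},
    {"id": "c", "cloud_cover": 4.0},
]
print(pick_scene(scenes, lambda s: s["id"] != "b"))
```

Returning `None` corresponds to running out of scenes, which is the point to widen the dates or move the AOI.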

Under the Hood

Data provider: Microsoft Planetary Computer (Sentinel-2 L2A, i.e. bottom-of-atmosphere surface reflectance).

Model: LDSR-S2 v1.0 (latent diffusion).

Patch size: 128 × 128 px → 512 × 512 px (10 m → 2.5 m effective spatial resolution).

Bands: RGB + NIR (four-band multispectral).
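The patch-size figures are self-consistent: input and output cover the same ground, and every 10 m pixel is replaced by a 4 × 4 block of 2.5 m pixels. A quick check using only the numbers above:

```python
LR_PIXELS, LR_RES_M = 128, 10.0  # input patch: 128 x 128 px at 10 m
SR_PIXELS, SR_RES_M = 512, 2.5   # output patch: 512 x 512 px at 2.5 m

scale = SR_PIXELS // LR_PIXELS       # upscaling factor per axis
lr_side_m = LR_PIXELS * LR_RES_M     # ground side length of the LR patch
sr_side_m = SR_PIXELS * SR_RES_M     # ground side length of the SR patch
sr_pixels_per_lr_pixel = scale ** 2  # SR pixels generated for each LR pixel

print(scale, lr_side_m, sr_side_m, sr_pixels_per_lr_pixel)  # 4 1280.0 1280.0 16
```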

Outputs:

lr.tif – original 10 m four-band patch.

sr.tif – super-resolved 2.5 m four-band patch.

example.png – side-by-side LR vs. SR preview.
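“Fully georeferenced” means both files map pixel positions to the same ground coordinates, only at different pixel sizes. A sketch of how the sr.tif grid can be derived from the lr.tif grid, assuming GDAL's six-number geotransform convention `(x0, pixel_width, row_rotation, y0, column_rotation, pixel_height)`; the helper names and the UTM-style origin are made up for illustration and are not read from the notebook's files:

```python
def sr_geotransform(lr_gt, factor=4):
    """Derive the SR geotransform: same top-left corner, pixel-size and rotation terms divided by factor."""
    x0, px_w, rot_row, y0, rot_col, px_h = lr_gt
    return (x0, px_w / factor, rot_row / factor, y0, rot_col / factor, px_h / factor)


def pixel_to_map(gt, col, row):
    """Map a pixel corner (col, row) to map coordinates with GDAL's affine formula."""
    x0, px_w, rot_row, y0, rot_col, px_h = gt
    return (x0 + col * px_w + row * rot_row, y0 + col * rot_col + row * px_h)


lr_gt = (440000.0, 10.0, 0.0, 4480000.0, 0.0, -10.0)  # hypothetical north-up 10 m grid
sr_gt = sr_geotransform(lr_gt)
print(sr_gt)
# The bottom-right corner of both patches lands on the same ground point
print(pixel_to_map(lr_gt, 128, 128), pixel_to_map(sr_gt, 512, 512))
```

rasterio exposes the same six numbers through `affine.Affine` in a different order; `Affine.from_gdal` converts between the two.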

See if LDSR-S2 Fits Your Use Case

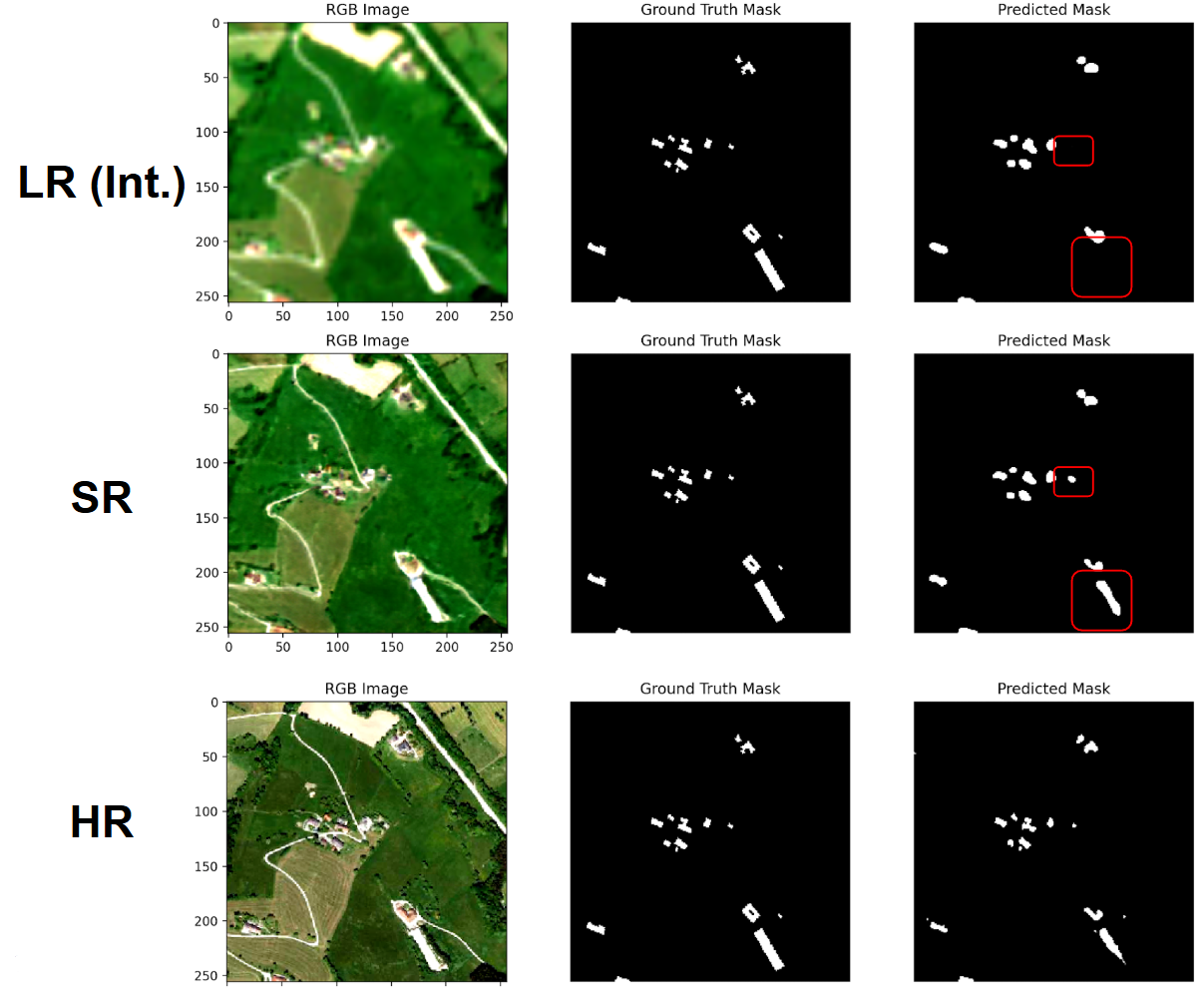

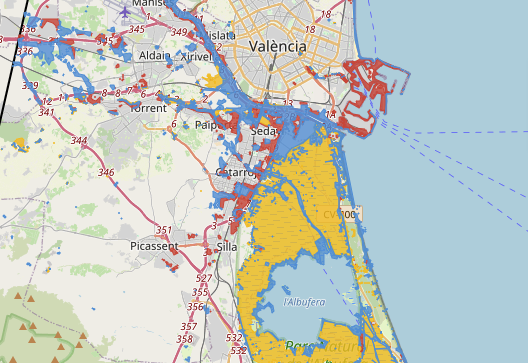

Trying to classify roofs?

Need crisper disaster-response maps?

Curious if SR helps object detection?

Open the notebook, click a location, and find out in minutes—no coding, no local GPU, zero setup. If it works, grab the GeoTIFFs and drop them into your workflow; if not, change dates or pick another AOI and iterate instantly.

Happy super-resolving! Don’t forget to let us know if these results are useful for you!