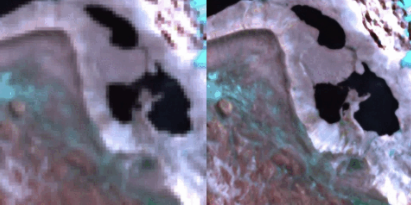

We’re excited to announce our involvement in SUPERIX, the Super-Resolution Intercomparison Exercise—a collaborative effort to quantitatively evaluate super-resolution (SR) methods for Earth Observation (EO) using Sentinel-2 imagery. With SR techniques rapidly gaining traction for enhancing freely available satellite data, SUPERIX aims to go beyond visual comparisons and tackle an essential question: Do SR models truly add meaningful information, or just pretty pictures?

Why SUPERIX?

While SR holds great promise for improving tasks like crop mapping, road detection, and object recognition, concerns remain about its scientific integrity. Some models risk altering reflectance values or hallucinating non-existent features. To ensure the reliability of these methods, SUPERIX proposes a standardized, community-driven benchmark using the OpenSR-test framework—offering real-world datasets and purpose-built metrics.

What’s Involved?

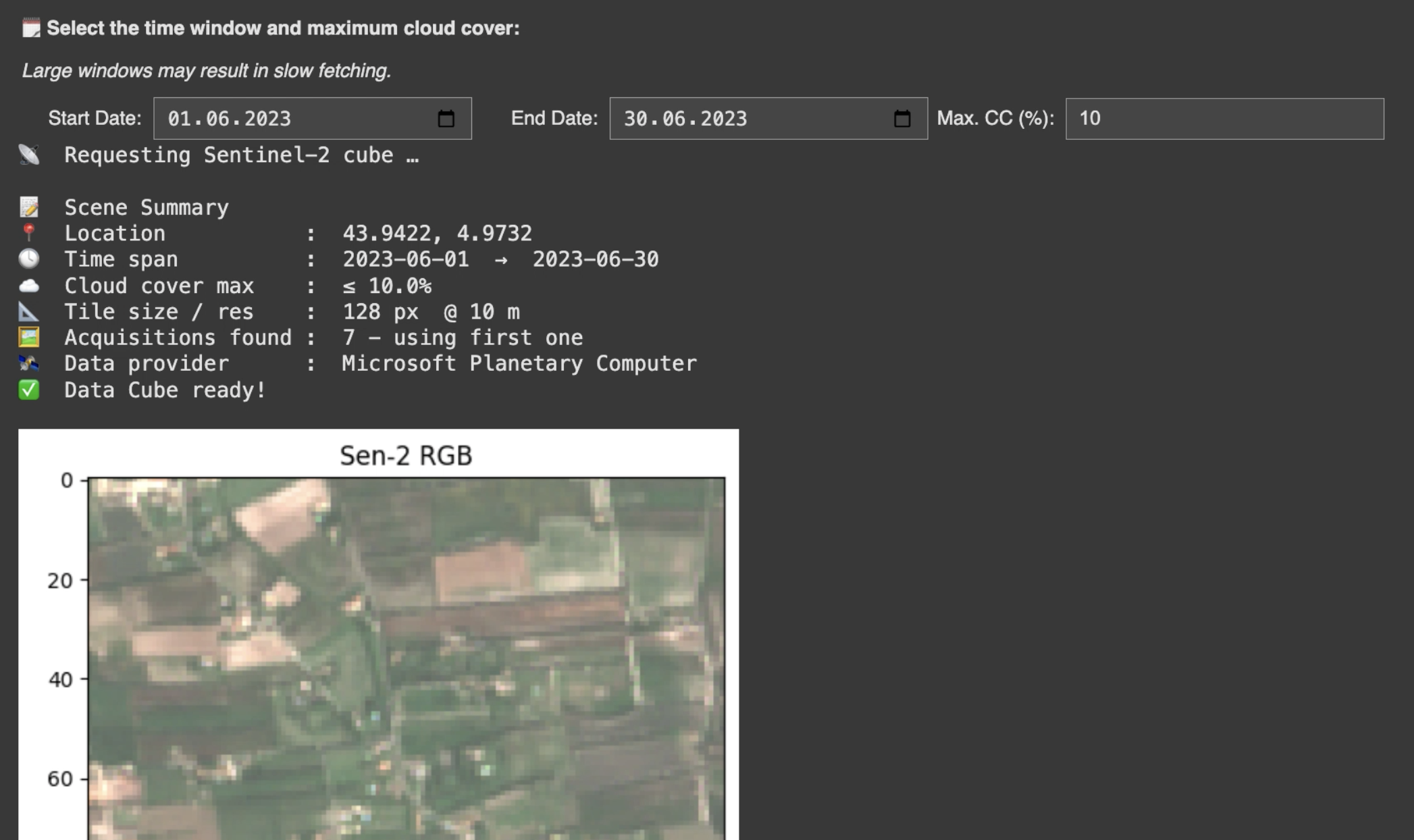

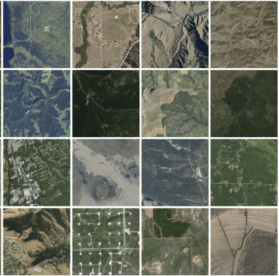

SUPERIX invites contributions from academia, industry, and space agencies. Participating teams will test their SR models against a curated set of high-resolution reference datasets, including:

NAIP (USA, agriculture/forest),

SPOT (urban/rural mix),

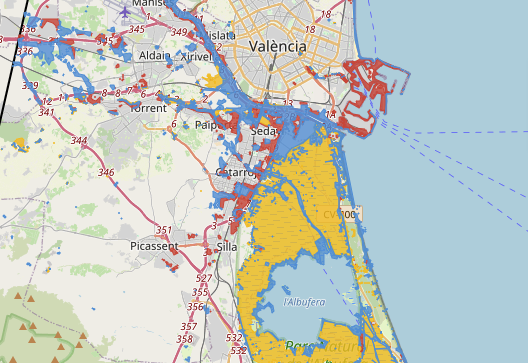

Spain Urban (roads and dense settlements),

Spain Crops (peri-urban agriculture), and

VENµS (multispectral, global).

Each dataset includes corresponding Sentinel-2 L1C/L2A images for realistic benchmarking.

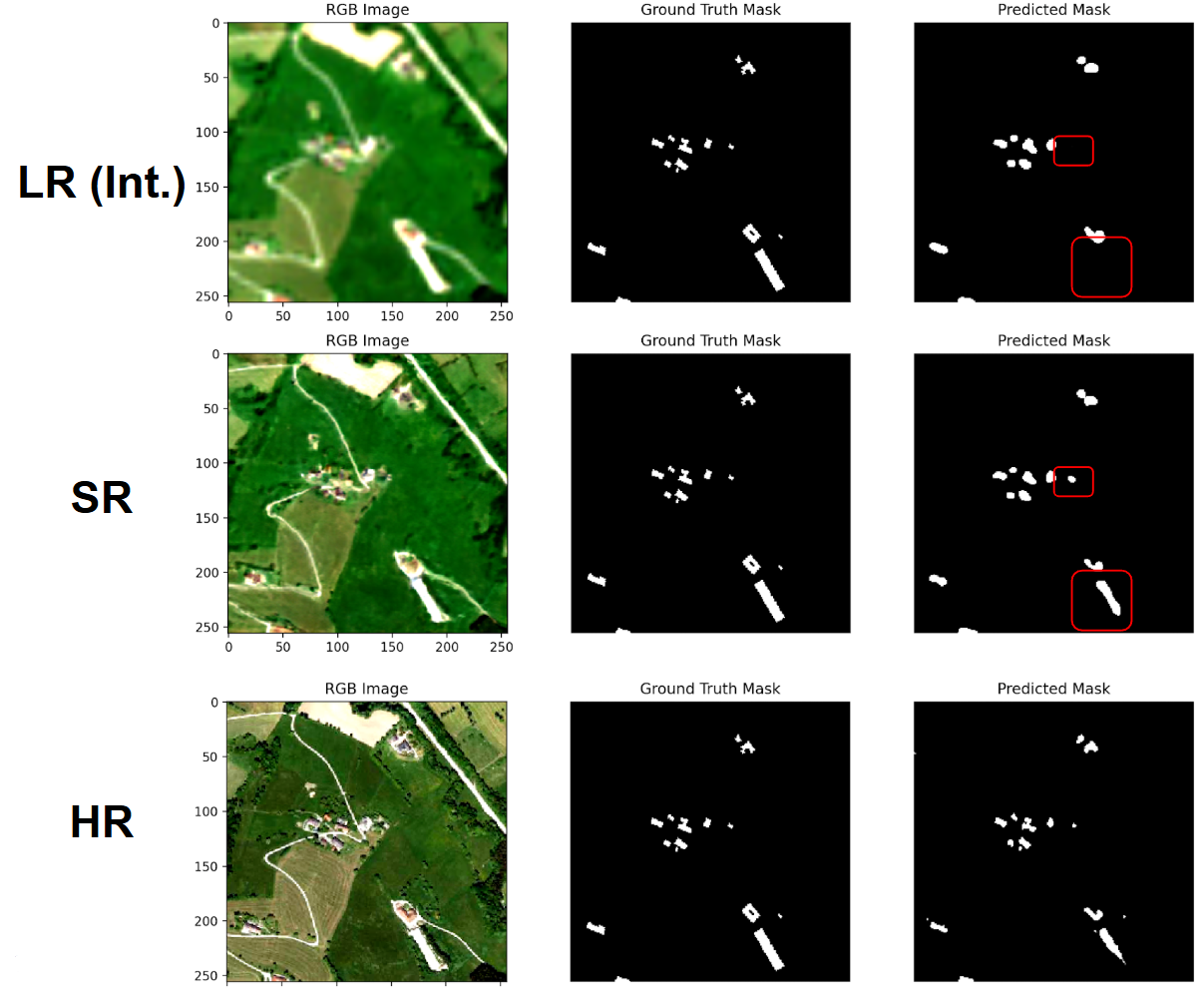

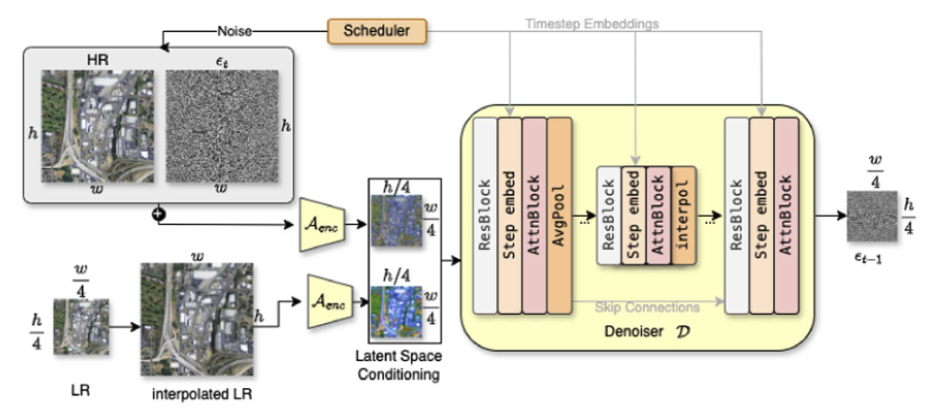

How Will SR Be Evaluated?

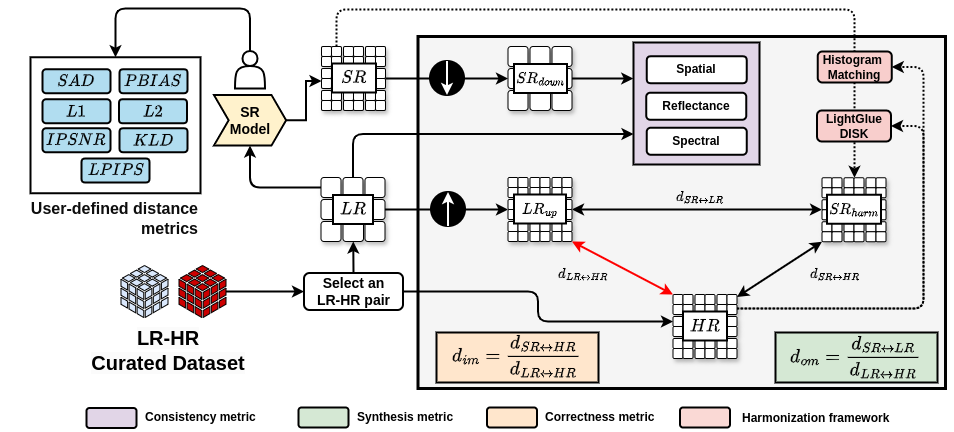

Metrics are grouped into two main categories:

1. Consistency with Original Data

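Reflectance (MAE, mean absolute error): Measures how well reflectance values are preserved.

Spectral (SAM, spectral angle mapper): Evaluates spectral integrity, i.e. whether the shape of each pixel's spectrum across bands is preserved.

Spatial (PCC, phase cross-correlation): Detects spatial shifts between SR and low-resolution (LR) images.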
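To make the three consistency checks concrete, here is a minimal NumPy sketch. It is not the OpenSR-test implementation. The function names, the `(bands, height, width)` array layout, and the assumption that the SR output has already been resampled to the LR grid are all illustrative choices.

```python
import numpy as np

def reflectance_mae(sr_lr, lr):
    """Mean absolute difference between reflectance values."""
    return float(np.mean(np.abs(sr_lr - lr)))

def spectral_angle_deg(sr_lr, lr, eps=1e-12):
    """Mean spectral angle in degrees; inputs are (bands, H, W)."""
    dot = np.sum(sr_lr * lr, axis=0)
    norm = np.linalg.norm(sr_lr, axis=0) * np.linalg.norm(lr, axis=0)
    cos = np.clip(dot / (norm + eps), -1.0, 1.0)
    return float(np.degrees(np.mean(np.arccos(cos))))

def phase_shift(a, b):
    """Integer-pixel (row, col) shift that maps b onto a, for one 2D band.

    Uses phase cross-correlation: the normalized cross-power spectrum
    of the two images has an inverse FFT that peaks at the shift.
    """
    cross = np.fft.fft2(a) * np.conj(np.fft.fft2(b))
    cross /= np.abs(cross) + 1e-12
    corr = np.fft.ifft2(cross).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks past the midpoint correspond to negative (wrapped) shifts.
    return tuple(int(p - n) if p > n // 2 else int(p)
                 for p, n in zip(peak, corr.shape))

# Usage with synthetic data (not real Sentinel-2 imagery).
rng = np.random.default_rng(0)
lr = rng.random((4, 32, 32))
shifted = np.roll(lr[0], (2, -3), axis=(0, 1))
print(reflectance_mae(lr + 0.01, lr))
print(spectral_angle_deg(2.0 * lr, lr))
print(phase_shift(shifted, lr[0]))
```

The spectral angle ignores overall brightness, so a uniformly scaled image scores close to zero on SAM while still registering on MAE. That is why the two metrics are reported separately.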

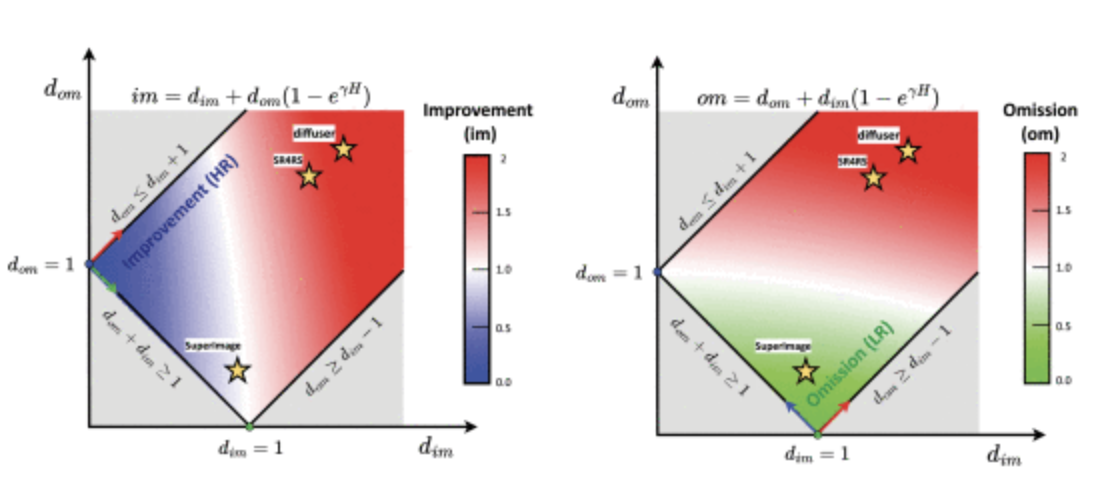

2. High-Frequency Detail Analysis

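Improvements (im_score): High-frequency detail that the SR output recovers correctly.

Omissions (om_score): Detail present in the high-resolution (HR) reference that the SR output misses.

Hallucinations (ha_score): Detail that the SR output introduces but that does not appear in the HR reference.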

Evaluations will run at both ×2 and ×4 scale factors, using MAE and LPIPS (Learned Perceptual Image Patch Similarity) to compare fine details from two angles: pixel intensity and perceptual structure.
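One way to picture the three detail scores is to ask, pixel by pixel, whether the SR output sits closest to the HR reference, closest to the upsampled LR input, or far from both. The sketch below does this with a plain absolute difference. The decision rule, the function name `detail_scores`, and the single-band inputs are assumptions made for illustration; the actual benchmark may define the scores differently, and a perceptual distance such as LPIPS would need a learned network instead.

```python
import numpy as np

def detail_scores(lr_up, sr, hr):
    """Fraction of pixels labelled improvement, omission, hallucination.

    lr_up: LR image upsampled to the HR grid (2D array)
    sr:    super-resolved image (2D array)
    hr:    high-resolution reference (2D array)
    """
    d_hr = np.abs(sr - hr)      # small: SR matches the reference
    d_lr = np.abs(sr - lr_up)   # small: SR barely changed the input
    d_ref = np.abs(hr - lr_up)  # detail that was there to recover
    # Smallest distance decides the label:
    #   0 = improvement   (SR closest to HR)
    #   1 = omission      (SR closest to LR)
    #   2 = hallucination (SR farther from both than they are apart)
    # Ties go to the first label, so fully flat regions count as
    # improvements here; a real metric would likely mask them out.
    labels = np.argmin(np.stack([d_hr, d_lr, d_ref]), axis=0)
    n = labels.size
    return {
        "im_score": float(np.sum(labels == 0) / n),
        "om_score": float(np.sum(labels == 1) / n),
        "ha_score": float(np.sum(labels == 2) / n),
    }

# Toy 2x2 example with hand-picked values.
lr_up = np.full((2, 2), 0.2)
hr = np.full((2, 2), 0.6)
sr = np.array([[0.55, 0.22],
               [-0.30, 0.60]])
scores = detail_scores(lr_up, sr, hr)
print(scores)
```

Under this rule the three scores always sum to 1, so a model cannot reduce omissions without the change showing up as either improvements or hallucinations.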

Submission Protocol

Teams may submit open-source (code included) or closed-source (GeoTIFF results only) models via GitHub pull requests. Submissions must include a metadata file with details about the model, authorship, scale factor, and licensing.
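The exact metadata schema is not given here, so the following is only a sketch of what such a file and a basic sanity check might look like. Every field name (`model_name`, `authors`, `scale_factor`, `license`, `open_source`) and the example values are hypothetical. Only the four kinds of information and the ×2/×4 scale factors come from the text.

```python
import json

# Hypothetical field names and types; the real schema may differ.
REQUIRED_FIELDS = {
    "model_name": str,
    "authors": list,
    "scale_factor": int,
    "license": str,
    "open_source": bool,
}
ALLOWED_SCALES = {2, 4}  # the benchmark evaluates x2 and x4

def validate_metadata(meta):
    """Return a list of problems; an empty list means the check passed."""
    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in meta:
            problems.append(f"missing field: {field}")
        elif not isinstance(meta[field], expected):
            problems.append(f"wrong type for {field}")
    if "scale_factor" in meta and meta["scale_factor"] not in ALLOWED_SCALES:
        problems.append("scale_factor must be 2 or 4")
    return problems

# Placeholder submission, not a real model.
example = {
    "model_name": "example-sr-model",
    "authors": ["A. Author"],
    "scale_factor": 4,
    "license": "MIT",
    "open_source": True,
}
print(json.dumps(example, indent=2))
print(validate_metadata(example))
```

Running a check like this before opening the pull request catches missing fields early, whichever schema the organizers settle on.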

After submissions are reviewed and metrics are computed, results will be shared with contributors for validation, followed by a public release via:

A dedicated website,

A technical report, and

A peer-reviewed research publication.

Get Involved

Whether you’re developing cutting-edge diffusion models or fine-tuning lightweight CNNs, SUPERIX is your chance to test your approach against the best in the field. It’s not just about winning—it’s about understanding where your model stands and helping the community advance EO SR together.

Interested in participating?

Reach out to the SUPERIX team. Let’s build a fair, open, and insightful benchmark for the future of remote sensing super-resolution.